Governing Third-Party MCP Servers in Claude Code & Cursor

Learn how third-party MCP servers in Claude Code and Cursor create new security risks—and how teams can govern access, data flow and auditability.

Ankita Gupta

Dec 20, 2025

A security team’s guide to governing MCPs in AI IDEs - Claude Code and Cursor

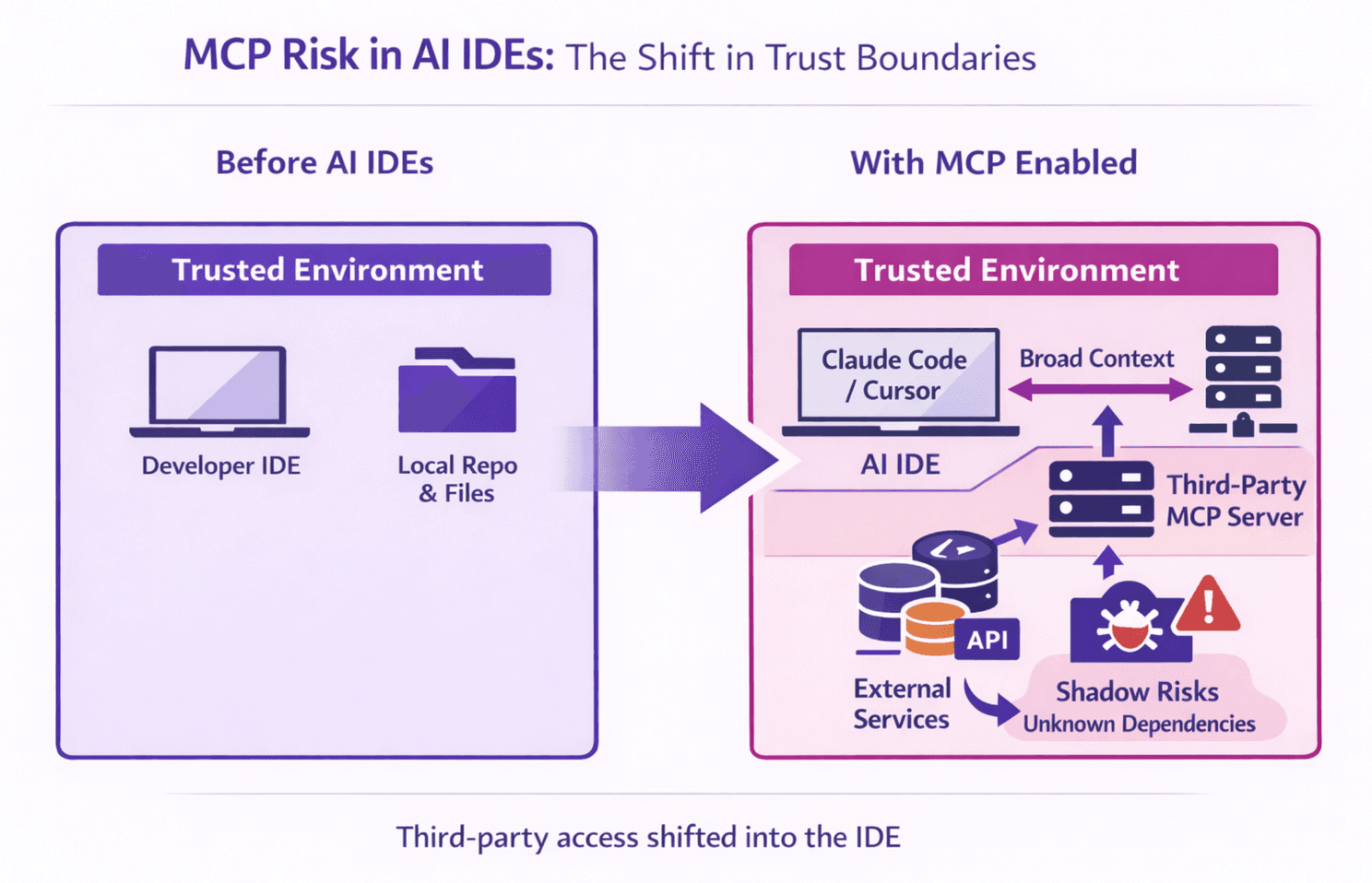

AI IDEs like Claude Code and Cursor are already inside engineering orgs. The problem security teams are trying to solve isn’t “AI-generated code might be buggy.” You already have ways to catch that (review, CI, scanners). The real problem is that third-party MCP servers turn the IDE into a privileged integration surface, and most orgs are adopting that surface without the governance they’d apply to any other third-party integration touching source code.

MCP in AI IDEs: What does it mean?

In Claude Code and Cursor, MCP enables “tools” that the IDE/model can use during development. Those tools can be local or remote, and they need context from the developer environment to be useful. In practice, MCP becomes a mechanism for pulling data from the developer environment and returning results that shape what the IDE suggests or changes.

That matters because developer environments contain exactly what attackers want: proprietary source, internal architecture, credentials, and operational details.

Where did the trust boundary move?

Before AI IDEs, most orgs treated the IDE as local and trusted, and third-party risk was mainly in CI/CD or production dependencies. MCP pulls third-party trust into the IDE loop.

What changed: third-party tooling is now participating in “what gets read” and “what gets sent” during development, not just at build or deploy.

The five MCP-specific failure modes security teams should expect

These are not edge cases. If MCP is enabled broadly, you will see some version of these.

1) Accidental “wide context” export of sensitive code

A developer asks: “Refactor this auth flow.” The IDE asks the MCP tool for context. The tool grabs far more than the developer intended (adjacent directories, shared libs, or internal docs) and sends it out.

Impact: IP leakage, architecture exposure, security control disclosure.

2) Secrets exposure via implicit context, not copy/paste

Secrets leak through .env, configuration files, logs, stack traces, test fixtures, or “sample configs” that contain real values. MCP tools often request “project context” and receive these files incidentally.

Impact: credential compromise, lateral movement, and incident response chaos.

3) Shadow integration risk

Developers adopt a popular third-party MCP hosted by an individual or small company. That MCP logs data, forwards to another API, or stores requests for debugging. Security has no contractual protections or visibility into retention.

Impact: uncontrolled third-party data handling and retention.

4) Transitive risk chains that are invisible to SBOMs

Even if your repo is clean, an MCP tool can call other services. It becomes a runtime dependency chain outside your inventory systems.

Impact: hidden data processors, policy violations, compliance gaps.

5) Blind spots

When something goes wrong (leak, breach, or policy investigation), you can’t answer: Which MCP server? Which repo? Which files? What was sent? When?

Impact: worst-case assumptions, painful incident response, unclear disclosure scope.

The “data leaves before your controls see it” problem

Most AppSec controls are downstream. They activate after code exists as a diff, after it is committed, or after it enters CI.

MCP-related risk happens earlier, while context is being assembled and shared. By the time a pull request exists, sensitive data may already have left the environment.

This is the core governance insight: if you only control CI and review, you are controlling the wrong stage of the lifecycle.

What does “good” look like for MCP governance inside IDEs?

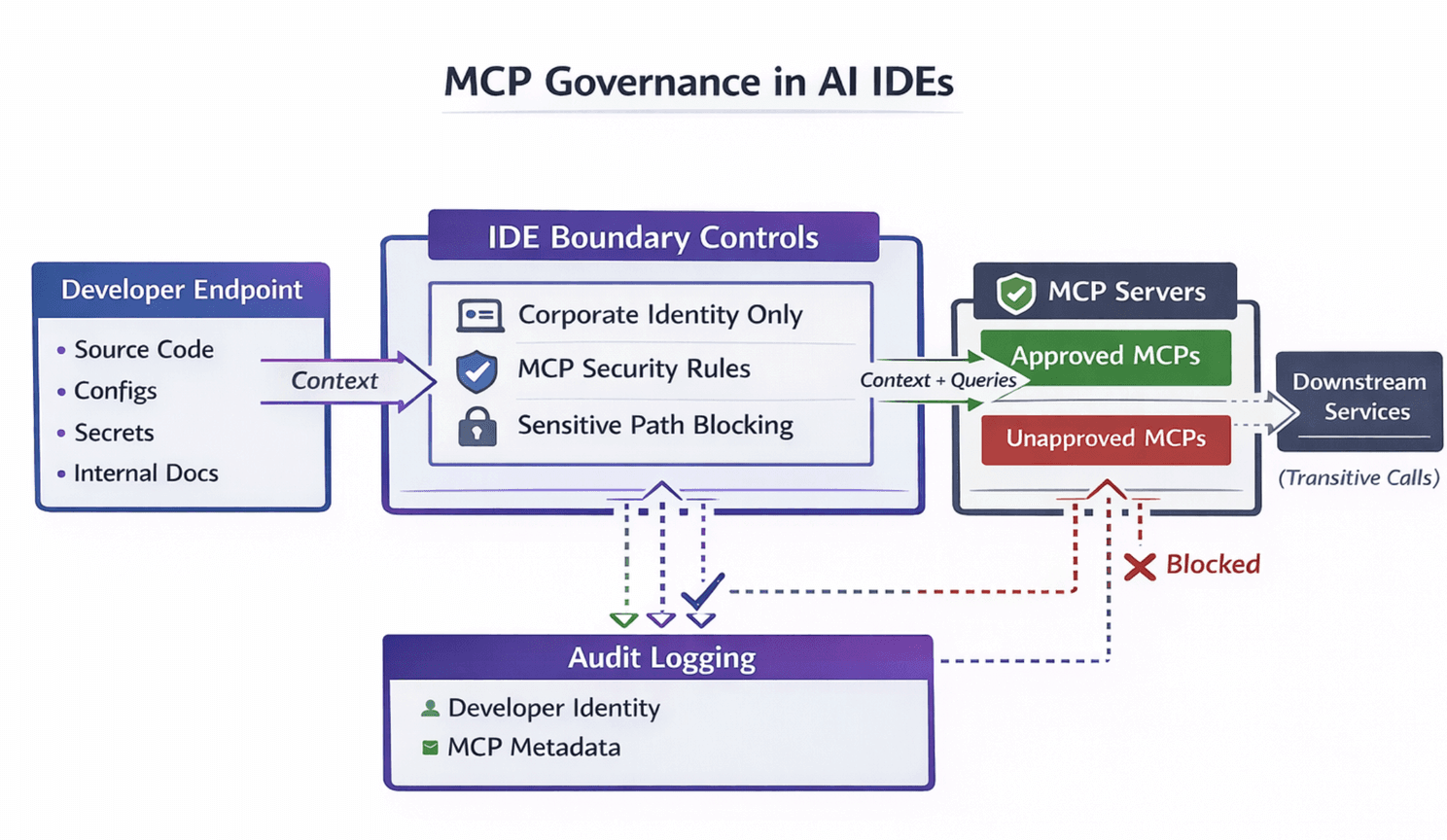

Treat third-party MCP servers like you would treat GitHub Apps with repo read scopes, CI plugins, or endpoint agents. That leads to three non-negotiables:

Inventory: know which IDEs and MCP servers are being used

Policy: define what is allowed, by whom, in which repos

Enforcement + audit: ensure policy and guardrails are actually enforced, and keep the records needed to prove it
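Inventory can start small. The sketch below is a minimal illustration, assuming MCP servers are declared in JSON files with a top-level `mcpServers` object and that those files live at `.mcp.json` or `.cursor/mcp.json` in a repo. Both the layout and the locations are assumptions to verify against the IDE versions you run. The function names and record shape are hypothetical.

```python
import json
from pathlib import Path

# Assumed config locations relative to a repo root; verify for your IDE versions.
CONFIG_CANDIDATES = (".mcp.json", ".cursor/mcp.json")


def parse_mcp_config(text: str) -> list[dict]:
    """Return one record per MCP server declared in a config file's text."""
    data = json.loads(text)
    servers = data.get("mcpServers", {})
    records = []
    for name, spec in sorted(servers.items()):
        # A "url" entry is treated as a remote server; anything else as a local command.
        if "url" in spec:
            kind, target = "remote", spec["url"]
        else:
            kind, target = "local", spec.get("command", "")
        records.append({"name": name, "kind": kind, "target": target})
    return records


def inventory_repo(repo_root: str) -> list[dict]:
    """Collect MCP server records from every known config file in one repo."""
    found = []
    for rel in CONFIG_CANDIDATES:
        path = Path(repo_root) / rel
        if path.is_file():
            for rec in parse_mcp_config(path.read_text(encoding="utf-8")):
                found.append({**rec, "config": rel})
    return found
```

Running `inventory_repo` across checked-out repos gives a first list of server names and endpoints to compare against policy. It does not see user-level configuration outside the repo, so it is a starting point rather than a complete inventory.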

Control points that actually work (and where they sit)

Key idea: You need at least one control on the endpoint or IDE boundary, plus egress controls, plus audit. If any of those are missing, the policy will be bypassed in practice.

An MCP Governance policy model


MCP Governance Policy rule 1: “Corporate identity only.”

Developers must use corporate accounts/keys for AI IDEs and MCP access. This prevents the “personal token + unknown endpoint” problem that destroys auditability.

MCP Governance Policy rule 2: “Approved MCP allowlist.”

Maintain a short list of approved MCP servers. Everything else is blocked or requires a time-boxed exception.

What “approved” should mean (minimum):

Clear owner and purpose

Hosting details (where it runs)

Data handling statement (retention, logging)

Update/patch process

Scope/capability summary (what it reads/does)
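One way to make the allowlist checkable is to store each approval as a record whose fields mirror the minimum criteria above, then classify every endpoint against it. This is a sketch under stated assumptions: the `ApprovedServer` type, the `check_server` function, and keying the allowlist by hostname are all illustrative choices, not a feature of either IDE.

```python
from dataclasses import dataclass
from datetime import date
from urllib.parse import urlparse


@dataclass(frozen=True)
class ApprovedServer:
    """Approval record; fields mirror the minimum criteria for 'approved'."""
    host: str
    owner: str
    purpose: str
    hosting: str
    data_handling: str
    patch_process: str
    capabilities: str


def check_server(url: str, allowlist: dict, exceptions: dict, today: date) -> str:
    """Classify an MCP endpoint as 'approved', 'exception', or 'blocked'.

    allowlist maps hostname -> ApprovedServer.
    exceptions maps hostname -> expiry date of a time-boxed exception.
    """
    host = (urlparse(url).hostname or "").lower()
    if host in allowlist:
        return "approved"
    expiry = exceptions.get(host)
    if expiry is not None and today <= expiry:
        return "exception"
    # Default deny: anything not listed, or with an expired exception, is blocked.
    return "blocked"
```

The default-deny return at the end is the important design choice: an unknown or malformed endpoint falls through to `"blocked"` rather than being silently allowed.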

MCP Governance Policy rule 3: “Sensitive paths are never shared.”

Even before you have perfect tooling, you can define a denylist of paths and patterns that must never leave endpoints (e.g., .env*, /secrets/, /prod/, customer export folders, credential stores). If your controls can’t enforce this yet, don’t pretend the risk is addressed.
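A denylist like this can be expressed as data and checked before any path is offered as context. The sketch below is illustrative only: it covers the `.env*`, `/secrets/`, and `/prod/` examples from the rule, the names `is_denied` and `filter_context` are hypothetical, and where such a check would actually run (an endpoint agent, a proxy, an IDE hook) depends on your tooling.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath

# Patterns taken from the examples in the rule; extend for your organization.
DENIED_FILE_PATTERNS = (".env*",)
DENIED_DIR_NAMES = ("secrets", "prod")


def is_denied(rel_path: str) -> bool:
    """True if the path sits under a denied directory or matches a denied file pattern."""
    parts = PurePosixPath(rel_path.replace("\\", "/")).parts
    if not parts:
        return False
    *dirs, filename = parts
    if any(d in DENIED_DIR_NAMES for d in dirs):
        return True
    return any(fnmatchcase(filename, pat) for pat in DENIED_FILE_PATTERNS)


def filter_context(paths: list[str]) -> list[str]:
    """Drop denied paths from a candidate context list, preserving order."""
    return [p for p in paths if not is_denied(p)]
```

Matching on whole directory names rather than substrings avoids false positives such as `src/prod_utils.py`, at the cost of missing unconventional names like `production-keys/`. A real denylist needs to be tuned against the repos it protects.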

MCP Governance Policy rule 4: “High-risk repos require stricter mode.”

For repos that contain auth, payments, security controls, infra, or regulated data handling, require stricter MCP posture: either no third-party MCP, or only org-hosted MCP servers.
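The stricter mode reduces to a small decision function once repos carry risk tags. A minimal sketch, assuming repos are labeled with tags such as `auth` or `payments` and each MCP server is classified as org-hosted or third-party. The tag names, the `Hosting` enum, and `mcp_allowed` are hypothetical.

```python
from enum import Enum


class Hosting(Enum):
    ORG = "org-hosted"
    THIRD_PARTY = "third-party"


# Hypothetical tag names for the high-risk categories named in the rule.
HIGH_RISK_TAGS = frozenset(
    {"auth", "payments", "security-controls", "infra", "regulated-data"}
)


def mcp_allowed(repo_tags: set, hosting: Hosting, on_allowlist: bool) -> bool:
    """Decide whether an MCP server may be used in a repo."""
    if not on_allowlist:
        return False  # rule 2 still applies everywhere
    if HIGH_RISK_TAGS & set(repo_tags):
        # Stricter posture: only org-hosted servers in high-risk repos.
        return hosting is Hosting.ORG
    return True
```

For example, `mcp_allowed({"payments"}, Hosting.THIRD_PARTY, True)` is `False` even though the server is on the allowlist, while the same server in an untagged repo is permitted.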

MCP Governance Policy rule 5: “Audit is mandatory.”

At minimum, collect:

Developer identity + device identity

IDE type/version

MCP server identifier + endpoint

Repo identifier

Timestamped session metadata

You don’t need to log every token; you need enough to reconstruct the scope during incident response.
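The minimum fields listed above fit in one structured record per MCP session. The sketch below assumes a JSON-lines log as the destination. The `McpAuditEvent` type and its field names are illustrative, not a schema defined by either IDE.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class McpAuditEvent:
    """One record per MCP session; metadata only, no prompt or file contents."""
    developer_id: str
    device_id: str
    ide: str
    ide_version: str
    mcp_server_id: str
    mcp_endpoint: str
    repo_id: str
    session_id: str
    started_at: str  # ISO 8601 timestamp in UTC


def utc_timestamp(moment: datetime) -> str:
    """Normalize a timezone-aware datetime to an ISO 8601 UTC string."""
    return moment.astimezone(timezone.utc).isoformat()


def to_json_line(event: McpAuditEvent) -> str:
    """Serialize an event as a single JSON line with stable key order."""
    return json.dumps(asdict(event), sort_keys=True)
```

With records like this, the incident-response questions (which server, which repo, which developer, when) become a filter over the log rather than a reconstruction exercise.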

Final Thoughts

Claude Code and Cursor now function as MCP integration platforms operating inside trusted developer endpoints. This fundamentally changes the risk model. Third-party MCP servers can read context, influence code, and move data before traditional security controls ever engage. That places them squarely within the security perimeter.

MCP turns AI IDEs into a new class of privileged integration point, and any system with that level of access must be governed with the same rigor applied to other source-code-level integrations.
