Agentic AI Security: Risks, Threats & Mitigation

Learn about agentic AI security, including key risks, potential threats, and mitigation strategies to protect AI systems effectively.

Bhagyashree

Dec 23, 2025

As Agentic AI is increasingly used, it is very important to differentiate between the two most rapidly evolving categories of agents. On one side, there is computer use by agents like Claude’s, which is designed to navigate desktop environments and human interfaces like screen inputs and keyboards. They most often imitate human behavior to complete general tasks and may also pose risks, such as the risk of shadow AI. On the other hand, application-specific agents, such as Copilot agents, are built to interact with predefined APIs or systems under enterprise governance.

According to a global security survey by SailPoint Technologies, around 80% of organizations reported encountering risky behaviors by AI agents, including improper data exposure and unauthorized access to systems. This number highlights why security teams should consider deploying these systems from a security standpoint.

In this blog, we explore what is Agentic AI security and actionable insights to mitigate agentic AI risks.

What is Agentic AI Security?

Agentic AI Security protects AI systems that autonomously use tools, perform actions, make decisions, and take action in live environments. Agents can create, modify, or make inappropriate decisions without human approval. They interact with important systems via powerful APIs, retain context across distributed environments that invite new risks, which demand purpose-built security controls.

While conventional AI security risks are applicable, agentic systems require more than static defenses. They need intelligent, real-time guardrails guided by integrated, pipelines, services and runtime connected to data sensitivity and exposure.

Why Security for Agentic AI Systems Matters

Agentic AI powers everything, from code-generation assistants to cloud-automation agents and autonomous security bots. Here’s why the security matters for agents.

A code-to-runtime inventory, continuously updated through deep code analysis and runtime context helps prevent threats.

Most traditional security platforms lack complete visibility into how AI-driven changes affect software behavior and risks.

With this kind of visibility, organizations can support automation, implement governance policies, and proactively identify risk in emerging AI-driven codebases.

Agentic AI Security Risks and Threats

The dynamic and autonomous nature of Agentic AI provokes multiple security risks and threats. Some of these risks and threats are listed below.

Data Poisoning and Integrity Attacks

An attacker can feed malicious inputs into agents' training or operational data, which leads to inaccurate outputs, biased decisions, or misaligned goals.

Manipulation of Agent Goals

Bad actors can manipulate an agent's goal via prompt injection or memory tampering, driving it towards the malicious ends without the explicit system breaches.

Privilege Compromise and Overprivileged Agents

AI agents often inherit permissions from systems or users. Without fine-grained controls, which lead to overprivileged agents that, if compromised, can perform unauthorized or destructive actions.

Bypassing Authentication and Authorization

AI agents often rely on stale credentials, making them major targets for credential theft, spoofing, and unauthorized access.

Data Exposure through Retrieval Augmented Generation (RAG) Systems

Agentic AI systems can autonomously retrieve and act on sensitive enterprise data via RAG without proper authorization controls, potentially exposing information beyond user permissions.

How does Agentic AI Security Work

Agents do not work like traditional model APIs. They take objectives, break them into call tools, steps, store information and continue to work until the task is complete. And the movement from from passive output to proactive execution is what defines the security model.

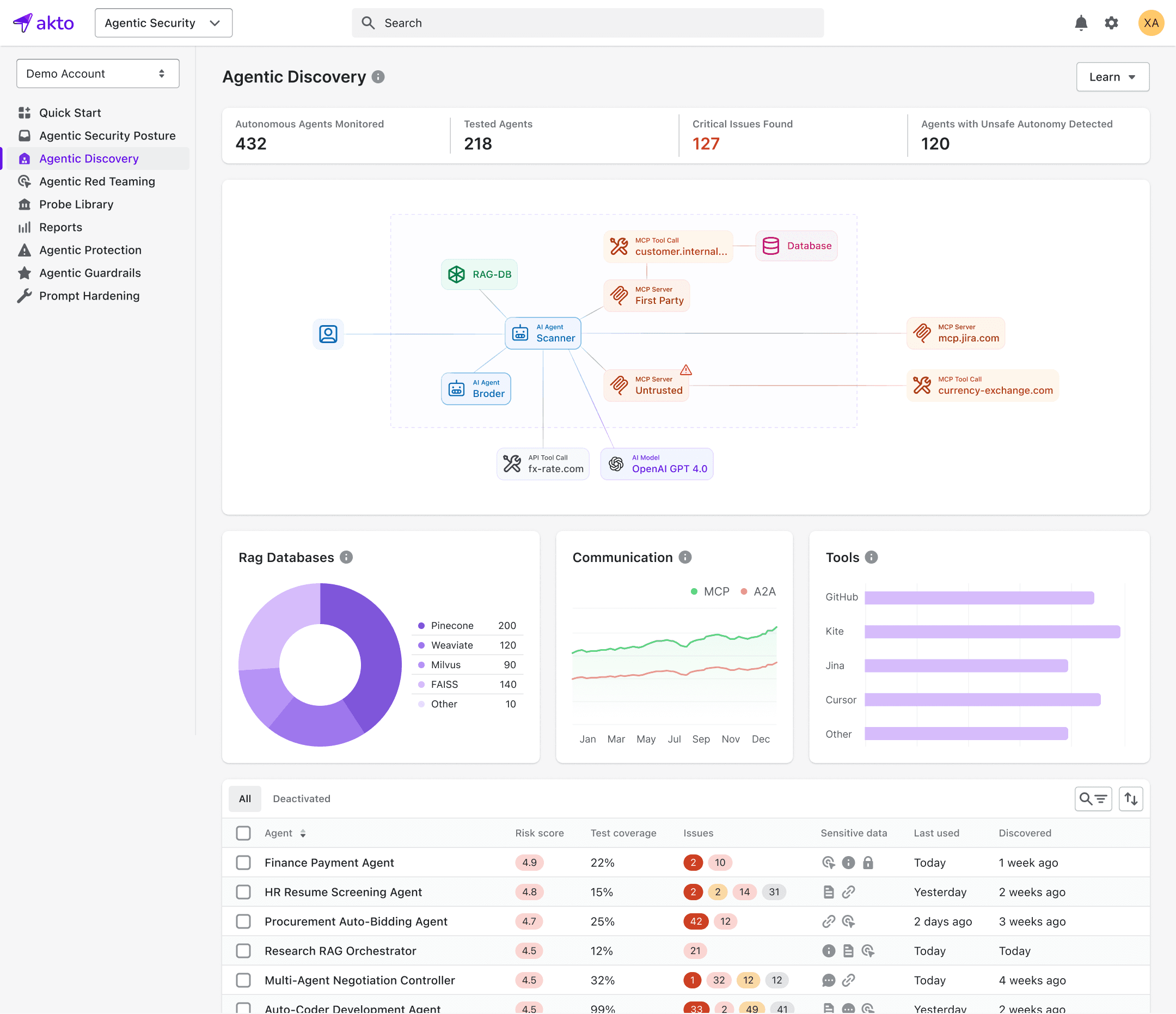

Focus on Discovery

The first and foremost thing is to understand the AI attack surface which is the foundation of security. Then adopt a powerful Agentic AI security platform like Akto which automatically discovers and inventories all AI-related endpoints, agent workflows, and LLM integrations across your infrastructure. The platform automatically detects AI agents, LLM endpoints, and vector databases, classifies of prompt data, integrations, and AI-based payloads, maps agent tool calls, operate invocations, and external integrations. In addition, it conducts real-time tracking of AI workflow changes and new agent deployments along with identification of shadow AI implementations and unauthorized LLM usage.

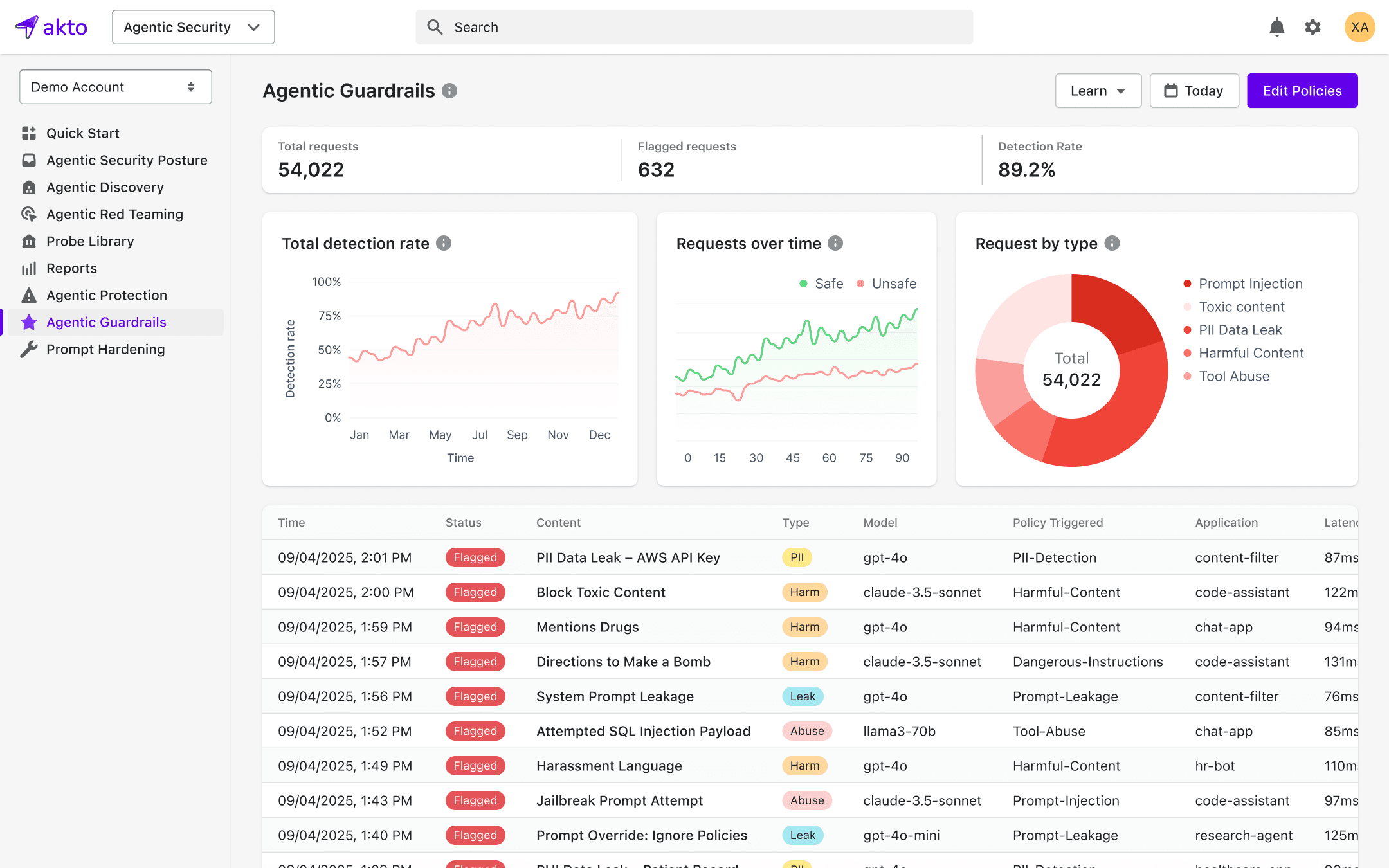

Guardrails

With Guardrails, AI agentic security platform helps create rule-based and AI-based policies to control model behavior, tool access, and sensitive data flow. Intercepts and analyzes every single AI action before execution, restricts inappropriate responses and escalates critical ones. Monitor every prompt, decision, and model output for full accountability and compliance across your AI ecosystem.

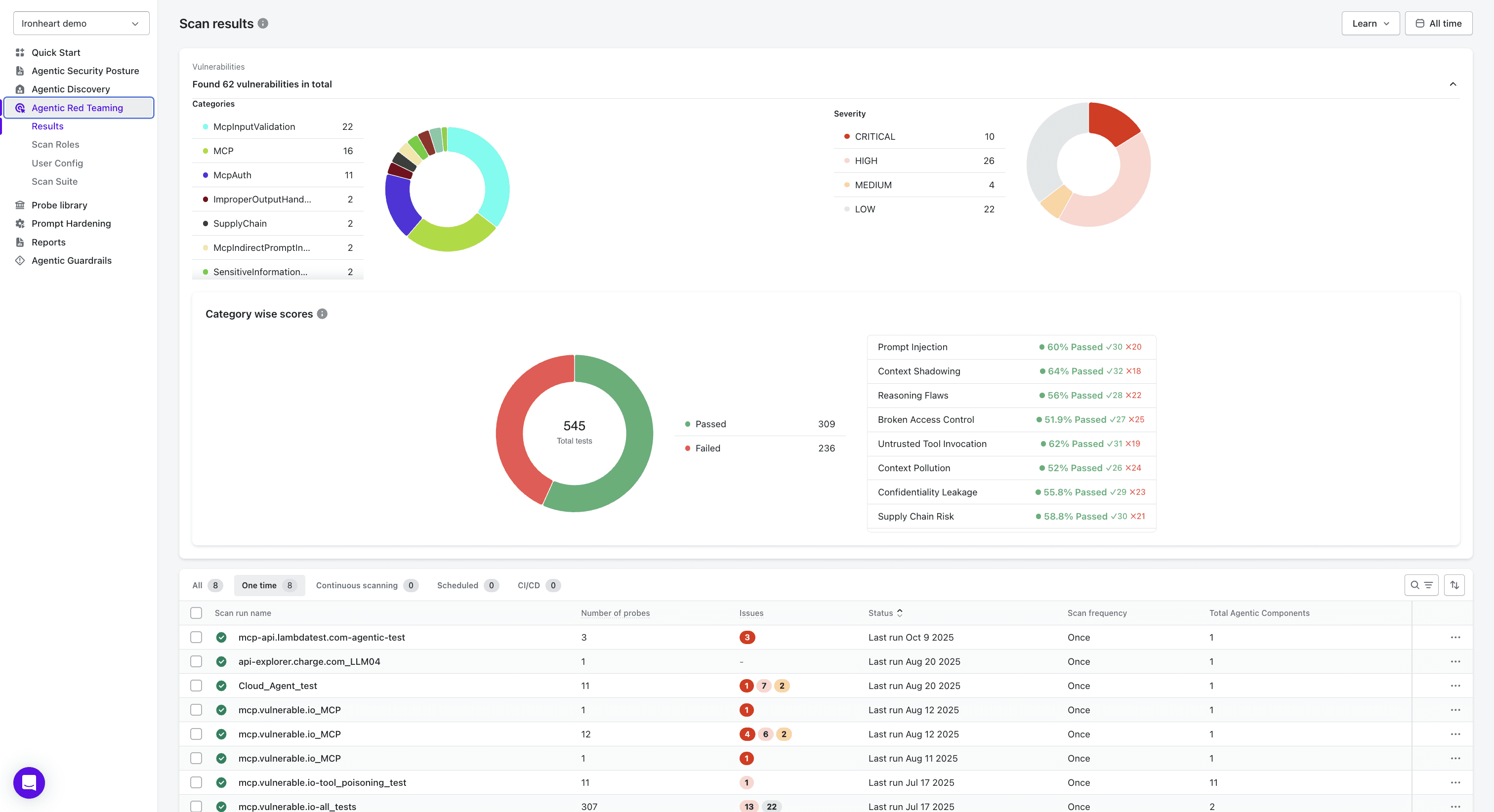

Red Teaming

Runs several contextual attacks across MCP Servers and AI Agents to simulate real threats. Hardens the system at scale and user prompts to block injection, manipulation, and goal drift. Uses AI-driven attacks to generate high-impact, multi-turn exploits and surface deep failures. The fundamental idea is that agentic AI security mirrors the agent's workflow, you secure the system by securing how it operates, not just by simply wrapping protections around a single model call.

How is Agentic AI Security Different From Traditional Security

Much of AI Security approach focuses on data leakage, model integrity and adversarial inputs. While these issues still prevail, agentic AI introduces additional risks, such as.

Unlimited autonomy such as unintended sequences of API calls or system actions.

Relentless access to external data sources or sensitive systems.

Evolving behavior that can bypass threat models.

Securing agentic AI systems requires stronger controls at multiple levels, including prompt governance, execution monitoring, action filtering, and identity management.

Traditional security is no longer enough when the “agents” are code that can act , think, and transform itself across environments.

Many traditional security platforms lack sufficient visibility into AI-driven changes that could affect software behavior and risk.

A code-to-runtime inventory, updated in real time through deep code analysis and runtime context, is crucial for understanding and managing this level of complexity. With this level of visibility, security teams can better facilitate automation, implement governance policies, and proactively identify risks in evolving, AI-powered codebases.

Agentic AI systems operate with a level of independence that can blur the distinction between code execution and decision-making. This makes governance a foundational element of agentic AI security. Governance in agentic AI systems refer to guidance, monitoring, and accountability for agent actions. It encompasses clearly defined policies, oversight mechanisms, and escalation paths that are carefully laid out to ensure that autonomous systems operate within permissible boundaries and align with the security team's goals.

Best Practices to Mitigate Agentic AI Security Risks

Here’s a breakdown of the best practices for mitigating Agentic AI security risks.

Adopt adaptive governance

AI features keeps evolving continuously, So, organizational governance need to keep up with the pace. Align with evolving standards such as the NIST AI Risk Management Framework or the EU AI Act, and lay out policies that address new agentic AI risks.

Identity Layer

The identity layer is a core for managing both human and machine users. Invest in unified, intelligent identity management that detects suspicious activity across all human and non-human entities.

Continuously improve security posture

Security is a continuous process. To stay ahead of adversaries, leverage threat intelligence, automate oversight, and train and up skill employees.

Implement context-sharing standards

Embed fine-grained access checks into the emerging context-sharing standards like Anthropic’s model context protocol (MCP) which opens in a new tab so that only proper information reaches the right agent to perform the right task.

Collaborate to standardize

Security teams should share information, participate in standard-setting groups, and work across vendors to solidify the ecosystem’s combined defense posture.

Build for resilience

At times, even a well-secured system may become prone to compromise. So, design resilient AI systems that can rapidly detect, contain, and recover from AI-driven threats with almost zero business disruption.

Final Thoughts on Agentic AI Security

By integrating robust Agentic AI Security, security teams can strengthen their defenses through threat detection, reduce response times, and stay ahead of evolving Agentic AI security risks and threats. Akto has integrated next-generation Agentic AI security and MCP Security to cover modern AI-powered businesses. It can easily integrate into the DevSecOps pipeline to assist security teams in maintaining a continuous inventory of APIs, monitoring runtime issues, and testing vulnerabilities. It runs 1000+ probes, simulates across discovered AI and MCPs, implements AI Guardrails, and automates policy actions, flagging sensitive data exposure, misconfigurations, and risk levels.

Discover Akto Agentic AI and MCP security. Book a free Agentic security demo right away!

Important Links

Experience enterprise-grade Agentic Security solution