What is Shadow AI?

Learn what Shadow AI is, how it differs from Shadow IT, key enterprise risks, real-world examples, and how to govern AI usage safely with visibility and control.

Ankita Gupta

Jan 12, 2026

A practical, enterprise-grade guide for AI Security Leaders.

Shadow AI refers to the use of generative AI tools, AI agents, AI IDEs, browser extensions, or AI-powered SaaS applications without formal approval, visibility, or governance by IT or security teams.

Just like Shadow IT emerged when employees started using unsanctioned cloud apps, Shadow AI is now emerging because AI tools are:

Extremely easy to adopt

Embedded directly into workflows (IDEs, browsers, SaaS apps)

Often used with sensitive enterprise data

The difference is scale, speed, and autonomy. Shadow AI spreads faster, acts autonomously, and can make decisions or take actions, not just store data.

This shift is exactly why modern enterprises need a dedicated governance layer for AI usage: one that provides visibility and control across AI tools, agents, and workflows without slowing teams down. This is the problem Akto is built to solve.

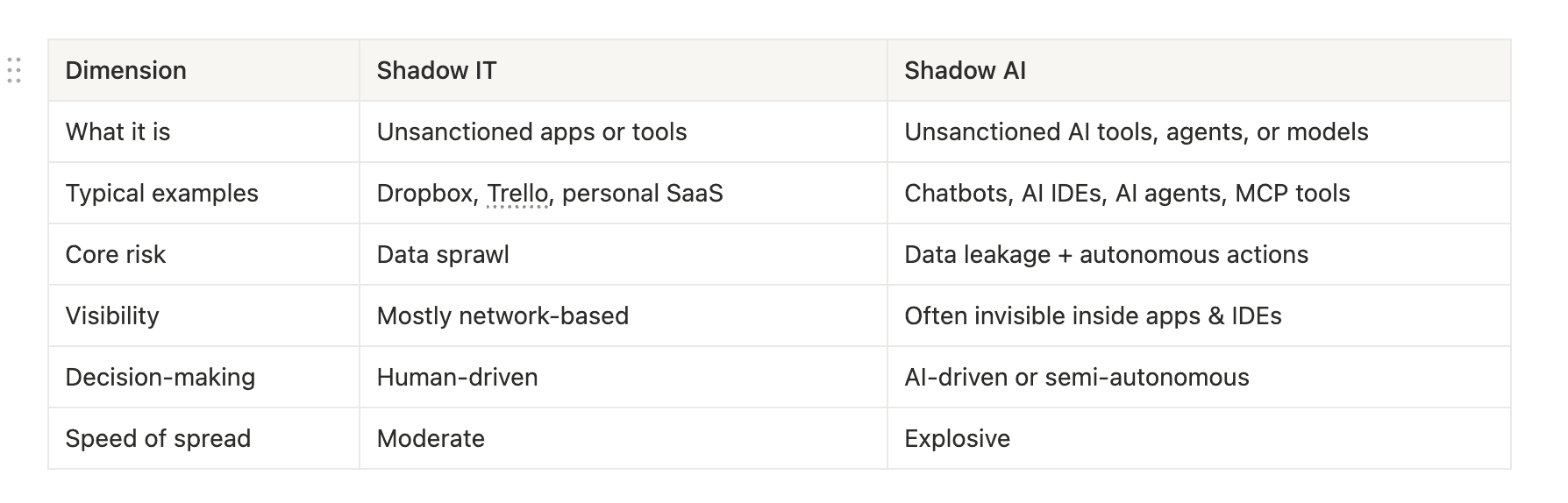

Shadow IT versus shadow AI

Shadow IT and Shadow AI are often mentioned together, but they represent very different challenges. Shadow IT typically refers to employees using unapproved software or cloud applications to move faster, such as file-sharing tools or productivity apps. The primary risk in these cases is loss of visibility into where data is stored and who has access to it.

Shadow AI goes several steps further. Instead of just storing or moving data, AI tools can interpret information, generate new content, and in many cases take actions on behalf of users. This introduces risks that go beyond data sprawl, including loss of intellectual property, unintended data retention, and autonomous system behavior that operates outside human review.


Another key difference is visibility. Shadow IT is often detectable through network traffic, access logs, or SaaS discovery tools. Shadow AI, however, operates inside IDEs, browsers, embedded SaaS features, and AI agents, where traditional security controls have limited reach.

Most importantly, Shadow IT is human-driven, while Shadow AI introduces autonomy. Decisions, actions, and workflows can be executed by AI systems at machine speed, changing the nature of risk from simple tool misuse to uncontrolled behavior. This shift makes Shadow AI a fundamentally new governance and security problem, not just an evolution of Shadow IT.

Key shift: Shadow AI doesn’t just store or move data; it can reason, generate, decide, and act.

What are the key risks of shadow AI?

Shadow AI introduces new classes of risk that traditional IT controls were never designed to handle:

Sensitive data exposure: Shadow AI makes it easy for employees to share sensitive data with AI tools as part of everyday work. Source code, credentials, customer data, and internal documents often end up inside prompts sent through IDEs, browsers, and agents. Because this data is shared as unstructured text rather than as files, most traditional security controls fail to detect or log the exposure, leading to continuous, low-visibility data leakage.

Unintended data retention and training: Many GenAI tools retain prompts and context by default, and some use them for quality checks or model improvement. Once data leaves the organization, teams often lose the ability to enforce retention, deletion, or residency requirements, creating long-term governance risk.

Loss of IP and proprietary logic: Shadow AI exposes how a business operates, not just what data it owns. Internal algorithms, workflows, prompts, and system designs are frequently shared with AI tools, gradually eroding intellectual property and competitive advantage.

Autonomous or semi-autonomous actions: Modern AI tools can take actions such as calling APIs, modifying data, or triggering workflows without explicit human review. This breaks the traditional security assumption that a human initiates and checks each action, and it increases the risk of unintended changes, misuse, or cascading failures.

Compliance and regulatory exposure: Shadow AI operates outside formal governance, making it difficult to meet requirements under SOC 2, ISO 27001, GDPR, HIPAA, and similar frameworks. Organizations often lack visibility into AI usage, data sharing, and policy enforcement, which leads to audit and regulatory failures.

Security blind spots: The most critical risk of Shadow AI is invisibility. AI usage happens inside IDEs, browsers, SaaS apps, and agents, where traditional security tools have limited reach. Without visibility into AI behavior, data access, and actions, security teams cannot assess risk, enforce policy, or respond effectively. This lack of visibility is why organizations are turning to AI-aware security platforms like Akto, which provide real-time insight into AI usage, data flows, and agent actions across IDEs, browsers, and GenAI applications.
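The first risk above, sensitive data exposure, is often mitigated by inspecting prompt text for secrets and personal data before it leaves for an AI tool. The sketch below is a minimal illustration of that idea, not a DLP product. The detector patterns and the `scan_prompt` and `redact` helpers are hypothetical. Real deployments need far broader, tuned rules and a place to run them, such as an IDE plugin, browser extension, or proxy.

```python
import re
from dataclasses import dataclass

# Illustrative detectors only (assumed patterns); real rule sets are much broader.
DETECTORS = {
    "aws_access_key_id": re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),
    "private_key_block": re.compile(r"-----BEGIN (?:[A-Z]+ )?PRIVATE KEY-----"),
    "email_address": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


@dataclass
class Finding:
    label: str
    start: int
    end: int


def scan_prompt(text: str) -> list:
    """Return every detector match in a prompt, ordered by position."""
    findings = [
        Finding(label, m.start(), m.end())
        for label, pattern in DETECTORS.items()
        for m in pattern.finditer(text)
    ]
    return sorted(findings, key=lambda f: f.start)


def redact(text: str, findings: list) -> str:
    """Replace each matched span with a [REDACTED:<label>] placeholder."""
    out, cursor = [], 0
    for f in findings:
        if f.start < cursor:  # skip a match that overlaps one already redacted
            continue
        out.append(text[cursor:f.start])
        out.append(f"[REDACTED:{f.label}]")
        cursor = f.end
    out.append(text[cursor:])
    return "".join(out)


prompt = "Why does this fail? key=AKIAABCDEFGHIJKLMNOP, owner jane@example.com"
print(redact(prompt, scan_prompt(prompt)))
# prints: Why does this fail? key=[REDACTED:aws_access_key_id], owner [REDACTED:email_address]
```

Pattern matching catches well-known formats such as cloud access keys and email addresses. It cannot recognize proprietary logic or confidential business context, so it complements visibility rather than replacing it.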

What are some of the causes of shadow AI?

Shadow AI emerges not because employees want to bypass security, but because AI adoption has outpaced enterprise governance. The tools are easy to use, deeply embedded into daily workflows, and often deliver immediate productivity gains.

Key causes include:

Pressure to move faster: Employees are expected to ship, respond, and decide quickly. AI tools offer instant leverage, making them hard to ignore even without formal approval.

AI embedded everywhere: Generative AI is now built into IDEs, browsers, productivity suites, and SaaS platforms. Adoption requires no procurement, no installation, and often no awareness that AI is even running.

Lack of clear AI usage policies: Many organizations still lack clear guidance on which AI tools are approved, what data can be shared, and how AI-generated actions should be governed.

Traditional security controls don’t apply: Existing controls were designed for SaaS apps and infrastructure, not for probabilistic systems that operate through natural language and autonomous agents.

Bottom-up adoption outpacing IT: Teams adopt AI organically at the edges of the organization, while security and IT teams struggle to gain visibility after the fact.

Examples of shadow AI

In real environments, Shadow AI rarely appears as an obvious policy violation. Instead, it quietly embeds itself into everyday workflows where productivity gains matter more than governance.

Common real-world examples include:

Developers using Cursor, GitHub Copilot, or other AI-powered IDEs that have direct access to private repositories, internal libraries, and proprietary code.

Employees pasting internal documents, customer information, support tickets, or strategic notes into ChatGPT or similar GenAI tools to summarize, rewrite, or analyze content.

Marketing, sales, and support teams using AI tools that connect directly to CRM systems, email platforms, or customer data sources without security review.

AI agents or copilots calling internal APIs through plugins, integrations, or Model Context Protocol (MCP) servers, often with broad permissions and little visibility into what actions are being taken.

Browser extensions that read page content, emails, dashboards, or tickets and automatically send that data to third-party AI models for analysis or response generation.

In most cases, these activities operate outside formal approval processes and security monitoring. As a result, security teams often have no clear visibility into which AI tools are in use, what data they access, or how AI-driven actions are affecting internal systems.
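For the agent, plugin, and MCP examples above, a useful first check is an inventory audit. It flags integrations that were never reviewed or that hold broad permissions. The sketch assumes such an inventory already exists. The `Integration` record, the scope strings, and the `BROAD_SCOPE_MARKERS` heuristic are hypothetical, because every platform defines its own permission model.

```python
from dataclasses import dataclass, field

# Hypothetical markers of over-broad permissions; real platforms (OAuth apps,
# MCP servers, browser extensions) each use their own scope vocabulary.
BROAD_SCOPE_MARKERS = ("*", "admin", "write:all", "delete")


@dataclass
class Integration:
    name: str
    kind: str        # e.g. "mcp_server", "browser_extension", "plugin"
    approved: bool   # has it passed security review?
    scopes: list = field(default_factory=list)


def audit(integrations):
    """Return findings for unapproved or over-permissioned AI integrations."""
    findings = []
    for integ in integrations:
        if not integ.approved:
            findings.append(f"{integ.name}: not approved ({integ.kind})")
        # A scope counts as broad if it contains any marker as a substring.
        broad = sorted(s for s in integ.scopes if any(m in s for m in BROAD_SCOPE_MARKERS))
        if broad:
            findings.append(f"{integ.name}: broad scopes {broad}")
    return findings


inventory = [
    Integration("crm-mcp", "mcp_server", approved=False, scopes=["crm:read", "crm:*"]),
    Integration("page-summarizer", "browser_extension", approved=True, scopes=["page:read"]),
]
for finding in audit(inventory):
    print(finding)
```

A substring heuristic is crude. Its value is in forcing an explicit list of who can do what, which is often the first thing missing when agents are adopted bottom-up.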

What is Shadow GPT?

Shadow GPT refers to employees using tools like ChatGPT for work without security or IT approval. This often involves pasting internal documents, code, or customer data into GPT tools to speed up everyday tasks.

Because these interactions happen through conversational prompts and outside formal controls, security teams typically have no visibility into what data is shared, how it is retained, or how outputs are used. Shadow GPT is often the first and most common form of Shadow AI inside organizations.

How to manage the risks of shadow AI

Managing Shadow AI does not mean banning AI. In practice, bans fail quickly and often push adoption further underground, making the risk harder to detect and control. Employees will continue to use AI tools because the productivity benefits are too significant to ignore.

Effective Shadow AI management focuses on visibility, governance, and guardrails, rather than restriction. The goal is not to slow teams down, but to ensure AI is used in a way that is safe, auditable, and aligned with enterprise risk and compliance requirements.

Key principles for managing Shadow AI include:

Assume AI usage already exists across teams, tools, and workflows, even if it has not been formally approved

Govern how AI is used, not whether it is used, by defining clear boundaries around data access and actions

Prioritize runtime visibility over static approvals, since AI behavior changes dynamically and cannot be secured through one-time reviews

Enable safe and approved AI usage paths, so secure usage is easier than insecure workarounds

Organizations that approach Shadow AI this way are able to preserve productivity while regaining control, visibility, and accountability over how AI operates inside the business.

How to protect against shadow AI in 5 steps

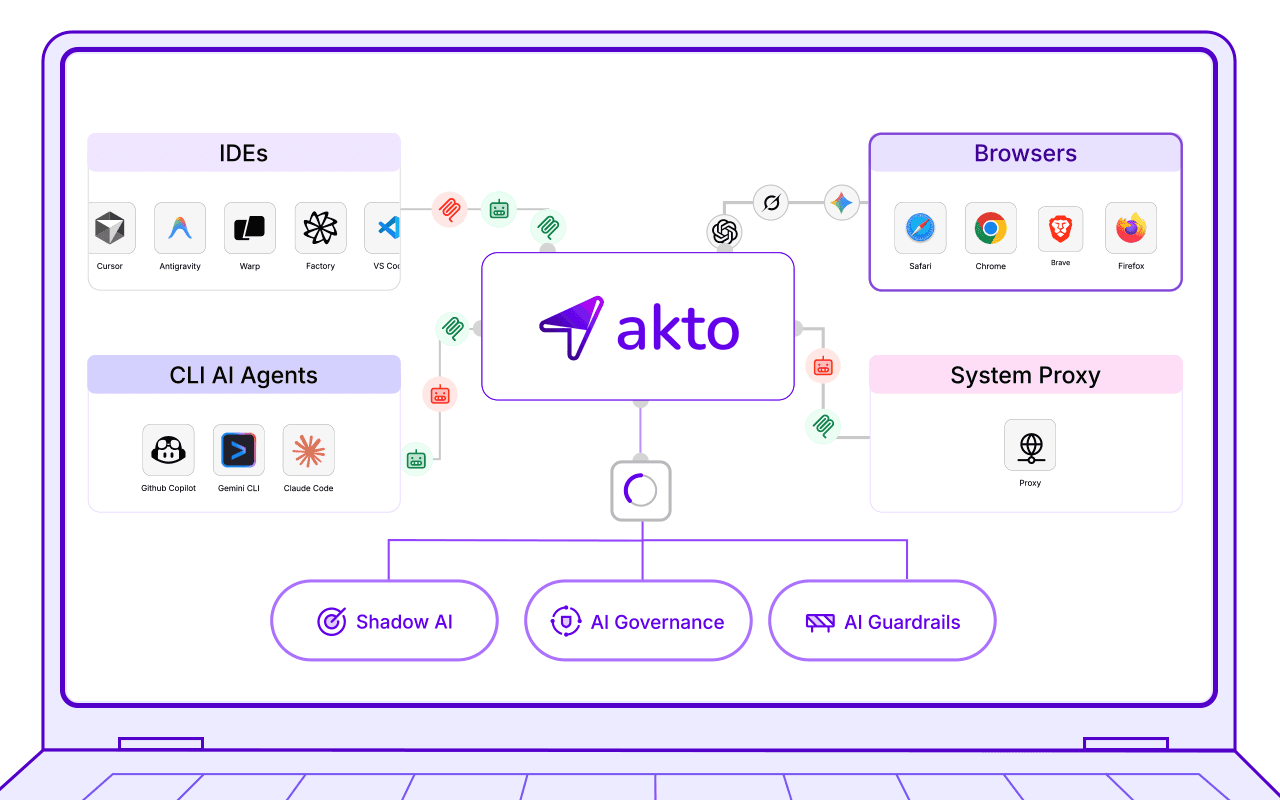

Step 1: Discover Agentic AI usage everywhere

Start by gaining visibility into where AI is already being used across the organization. This includes:

IDEs

Browsers and extensions

SaaS applications

AI agents and MCP connections

Shadow AI cannot be managed if it is invisible. Discovery is the foundation for every control that follows.

These are exactly the environments Akto monitors, from AI IDE usage and browser-based GenAI tools to MCP servers and autonomous agents, giving security teams visibility where they have traditionally had none.
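As a starting point for discovery, network egress logs can reveal which users are reaching well-known GenAI endpoints. The sketch below assumes a proxy log exported as CSV with `user` and `host` columns. The log format, the `discover_ai_usage` helper, and the short domain list are illustrative assumptions. The approach has a clear limit: it cannot see AI features embedded inside sanctioned SaaS apps or model calls routed through other services, which is the gap described above.

```python
import csv
import io
from collections import Counter

# Example GenAI endpoints to watch for; a production list is much longer and
# must be maintained as new tools appear.
AI_DOMAINS = {
    "api.openai.com",
    "chatgpt.com",
    "api.anthropic.com",
    "claude.ai",
    "generativelanguage.googleapis.com",
}


def is_ai_host(host: str) -> bool:
    """True if host is a listed AI domain or a subdomain of one."""
    host = host.strip().lower()
    return any(host == d or host.endswith("." + d) for d in AI_DOMAINS)


def discover_ai_usage(log_csv: str) -> Counter:
    """Count requests per (user, host) to AI endpoints in a CSV proxy log.

    Assumes a header row with at least 'user' and 'host' columns.
    """
    usage = Counter()
    for row in csv.DictReader(io.StringIO(log_csv)):
        if is_ai_host(row["host"]):
            usage[(row["user"], row["host"].strip().lower())] += 1
    return usage


sample_log = """user,host
alice,api.openai.com
bob,intranet.example.com
alice,api.openai.com
carol,claude.ai
"""
for (user, host), count in discover_ai_usage(sample_log).most_common():
    print(user, host, count)
```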


Step 2: Map AI data flows

Once AI usage is visible, understand how data moves through these tools:

What data is sent into AI systems

Where AI outputs are stored or reused

Which internal or external systems are accessed

This step is critical for compliance, audit readiness, and incident response.
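One way to make these data-flow questions answerable during an audit is to record each AI interaction as a structured event and aggregate events per tool. The `AIFlowEvent` fields and data-class labels below are hypothetical. In practice they would be populated by whatever monitoring captures AI traffic.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class AIFlowEvent:
    """One observed AI interaction; all field names are illustrative."""
    user: str
    tool: str                    # which AI tool handled the request
    data_classes: frozenset      # what data was sent, e.g. {"source_code"}
    output_sink: str             # where the output was stored or reused
    systems_accessed: frozenset  # internal or external systems the tool touched


def build_flow_map(events):
    """Aggregate events into tool -> {data_classes, sinks, systems}."""
    flow = defaultdict(lambda: {"data_classes": set(), "sinks": set(), "systems": set()})
    for e in events:
        entry = flow[e.tool]
        entry["data_classes"] |= e.data_classes
        entry["sinks"].add(e.output_sink)
        entry["systems"] |= e.systems_accessed
    return dict(flow)


events = [
    AIFlowEvent("alice", "ide-assistant", frozenset({"source_code"}),
                "git:feature-branch", frozenset({"github"})),
    AIFlowEvent("bob", "ide-assistant", frozenset({"source_code", "credentials"}),
                "local-file", frozenset({"github", "jira"})),
    AIFlowEvent("carol", "chat-assistant", frozenset({"customer_pii"}),
                "email-draft", frozenset()),
]
for tool, summary in build_flow_map(events).items():
    print(tool, summary)
```

A per-tool summary like this answers the audit question "which tools have ever received credentials or customer data?" directly, without replaying raw logs.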

Step 3: Define AI governance policies

Establish clear, enforceable policies that define:

Which Agentic AI tools are approved

What types of data can be shared with AI

When human review is required

What actions AI agents are allowed to take
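These four policy questions can be expressed as policy-as-code so the same rules are applied consistently. The sketch below is a minimal, hypothetical model that returns allow, require-review, or deny for a single request. The `AIPolicy` fields and the tool, data-class, and action names are all invented for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Decision(Enum):
    ALLOW = "allow"
    REVIEW = "require_human_review"
    DENY = "deny"


@dataclass(frozen=True)
class AIPolicy:
    approved_tools: frozenset        # which AI tools are approved
    allowed_data_classes: frozenset  # what data may be shared with AI
    allowed_actions: frozenset       # what agents may do on their own
    review_actions: frozenset        # what agents may do only after human sign-off


def evaluate(policy: AIPolicy, tool: str, data_classes: set,
             action: Optional[str] = None) -> Decision:
    """Check tool, then data, then action; anything not listed is denied."""
    if tool not in policy.approved_tools:
        return Decision.DENY
    if not set(data_classes) <= policy.allowed_data_classes:
        return Decision.DENY
    if action is None:
        return Decision.ALLOW
    if action in policy.review_actions:
        return Decision.REVIEW
    if action in policy.allowed_actions:
        return Decision.ALLOW
    return Decision.DENY


policy = AIPolicy(
    approved_tools=frozenset({"enterprise-chat", "approved-ide-assistant"}),
    allowed_data_classes=frozenset({"public", "internal"}),
    allowed_actions=frozenset({"read_ticket", "draft_reply"}),
    review_actions=frozenset({"send_email", "update_crm_record"}),
)
print(evaluate(policy, "enterprise-chat", {"internal"}, "send_email"))  # Decision.REVIEW
```

Unlisted tools, data classes, and actions default to deny. With this design, a new AI tool has to be added to the policy deliberately instead of slipping through unreviewed.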

Step 4: Apply runtime guardrails

Static reviews are not enough for dynamic Agentic AI. Organizations need runtime controls that:

Monitor Agentic AI behavior in real time

Enforce prompt, data, and action policies

Detect risky, anomalous, or unexpected behavior

Guardrails are where AI governance becomes operational.
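A small example of detecting unexpected behavior at runtime is a guard that flags an agent performing far more actions than usual within a short window. That pattern is consistent with a runaway loop or misuse. This is a simple sliding-window heuristic, not a full anomaly-detection system. `ActionRateGuard` and its thresholds are hypothetical.

```python
from collections import defaultdict, deque


class ActionRateGuard:
    """Block an agent that performs more than max_actions within window_seconds.

    Thresholds are illustrative assumptions, not recommended values.
    """

    def __init__(self, max_actions: int, window_seconds: float):
        self.max_actions = max_actions
        self.window = window_seconds
        self._history = defaultdict(deque)  # agent id -> timestamps of allowed actions

    def check(self, agent_id: str, now: float) -> bool:
        """Return True if the action may proceed, False if it should be blocked."""
        q = self._history[agent_id]
        # Drop timestamps that have fallen out of the sliding window.
        while q and now - q[0] >= self.window:
            q.popleft()
        if len(q) >= self.max_actions:
            return False  # blocked actions are not recorded in the history
        q.append(now)
        return True


guard = ActionRateGuard(max_actions=3, window_seconds=10)
print([guard.check("agent-a", t) for t in (0, 1, 2, 3)])  # [True, True, True, False]
```

In a real deployment, `now` would typically come from `time.monotonic()`. A blocked action would usually raise an alert for human review rather than fail silently.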

Step 5: Enable safe adoption

The goal is safe acceleration, not slowdown. Security programs should:

Provide employees with approved AI usage paths

Make secure usage easier than insecure workarounds

Continuously evolve policies as AI tools and use cases change

When done correctly, this approach allows organizations to move fast with AI while maintaining visibility, control, and trust.

Final Thoughts on Shadow AI

Shadow AI is already present in most organizations, driven by rapid, bottom-up adoption of powerful AI tools. The real risk is not AI itself, but the lack of visibility, governance, and control around how it is used.

Unlike Shadow IT, Shadow AI can reason, generate, and act autonomously, changing the nature of enterprise risk. Organizations that succeed will not block AI, but will focus on discovery, runtime guardrails, and safe enablement to balance innovation with security.

Shadow AI is ultimately a governance problem. Addressing it early allows enterprises to scale AI with confidence, not fear.
