AI Risk Assessment: Frameworks, Steps & Best Practices

Learn how AI risk assessment helps organizations identify, prioritize, and mitigate AI security and governance risks.

Bhagyashree

Feb 3, 2026

As with so many other technological leaps, a central challenge posed by AI is understanding and quantifying the risks it brings. For security teams looking to harness the potential of AI, understanding the associated risks and threats is not just a matter of regulatory compliance but of strategic importance. How security teams approach this journey will have a lasting impact on business operations, finances, and reputation.

This blog explains what an AI risk assessment is and lays out the steps for an effective AI risk assessment framework.

What Is an AI Risk Assessment?

AI risk assessment is the systematic process of identifying and analyzing risks linked to the use of AI technologies. It is most effective when implemented through a formal framework that supports consistent governance and evaluation.

The assessment must cover both internal AI systems and third-party vendors to ensure alignment with ethical standards, privacy policies, and company values. The most common risks include algorithmic bias, lack of transparency in model training, and non-compliance with AI governance requirements.

Why Does AI Risk Assessment Matter?

Here is why AI risk assessment matters:

Unassessed AI exposes organizations to large-scale failures, because even a few AI flaws can multiply across millions of automated decisions.

Real-world incidents such as biased algorithms, AI jailbreaks, and data leaks show how insufficient AI governance results in discrimination, security breaches, and safety violations.

Poorly governed AI erodes trust and credibility with partners, investors, and customers, which affects long-term business value.

AI Risk Assessment Frameworks

The presence of many frameworks reflects an ongoing global dialogue on how to manage AI risks, and the complexity of the issue itself. These AI risk assessment frameworks share the common objective of handling AI risks effectively, but they differ in several key aspects, as the comparison below shows.

NIST AI Risk Management Framework

NIST offers guidance for security teams that focuses on practical risk assessment across the AI lifecycle.

It provides a flexible framework for risk assessment without clear-cut categorization.

Implements a structured but adaptable process.

It is non-regulatory, voluntary guidance.

Prioritizes the involvement of stakeholders.

The framework includes security as part of overall risk management.

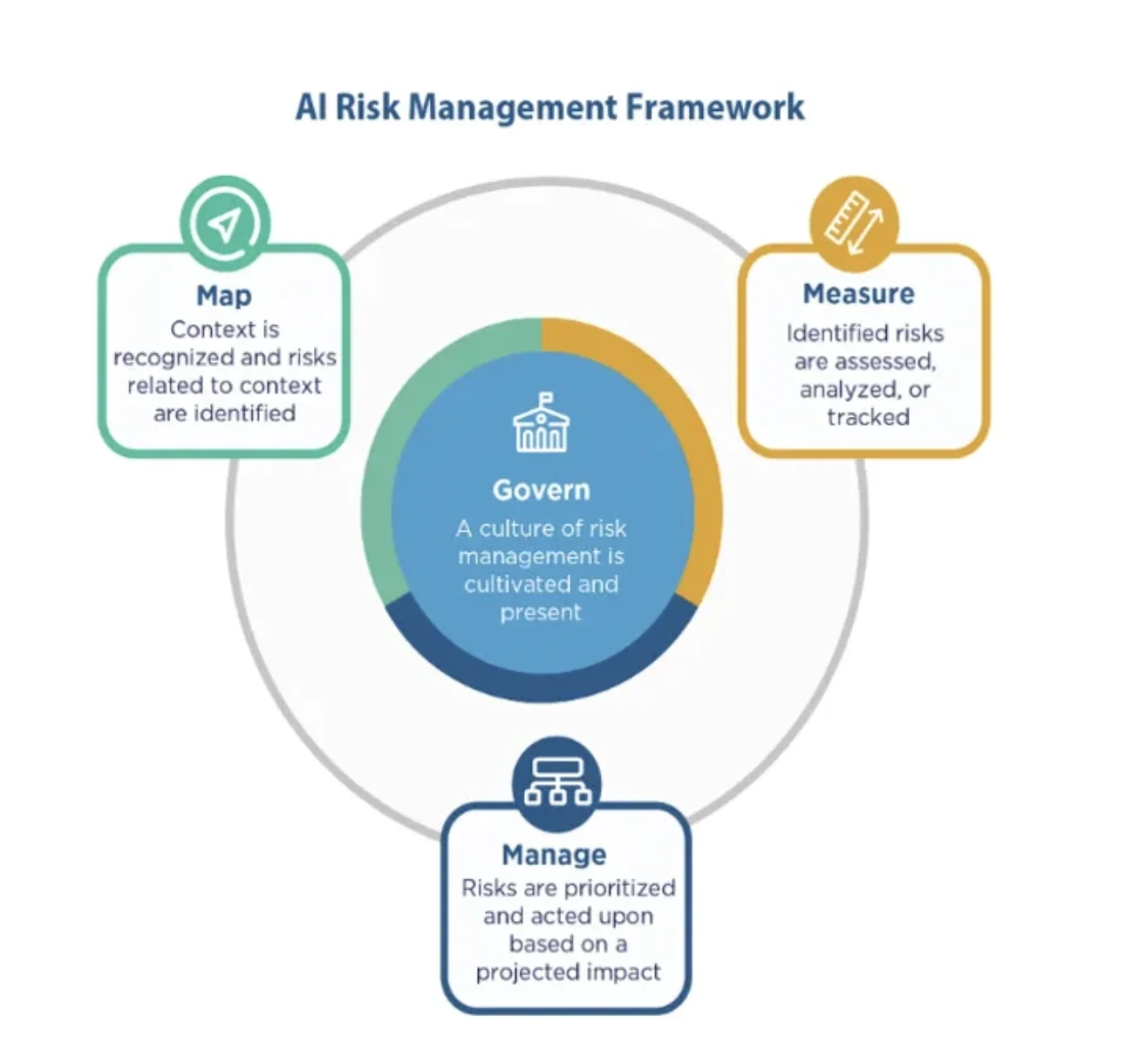

For example, the NIST AI framework defines 4 main interconnected functions designed to promote trustworthy AI.

Govern

Map

Measure

Manage

The framework then goes on to define several categories for each of the 4 functions, with multiple subcategories under each category. Each level adds more specific examples of practices that help security teams manage AI development effectively and appropriately.

Image source: Medium

EU AI Act

This law is aimed at protecting EU citizens and their fundamental rights.

Clear-cut risk categorization.

Sets specific requirements based on risk level.

It is a regulatory framework with legal implications.

Involves various stakeholders in the regulatory process.

Includes security requirements, specifically for high-impact AI systems.

MITRE

Offers a suggested regulatory framework and a security threat matrix for AI systems.

Comprehensive categorization of AI security threats.

Proposes regulatory approaches and offers detailed security implementation guidance.

Suggests a regulatory framework but is not a regulation itself.

Promotes collaboration between government and industry.

Focuses on AI security threats and remedial measures.

Google SAIF

Provides a security framework for building and deploying AI.

Implicitly classifies risks across the development, deployment, execution, and monitoring phases.

Implements a practical, step-by-step approach across 4 key pillars.

Non-regulatory best practices framework.

Focuses on implementation within the organization.

Centered entirely on AI security throughout the lifecycle.

Security teams may need to integrate aspects of multiple AI risk management frameworks to create a systematic, comprehensive approach tailored to their needs and regulatory environments.

Steps of an AI Risk Assessment Framework

Here's a breakdown of 5 steps for conducting an AI risk assessment:

Step 1: Clearly Define the Scope

Define which AI systems are being assessed, why they are important, and who is responsible. A clear scope focuses effort on high-risk systems, prevents wasted resources, and establishes accountability across governance, technical, and operational stakeholders.

Step 2: Inventory AI Systems and Risks

Create a centralized inventory of all AI systems that documents goals, data sources, ownership, lifecycle stage, and dependencies. This visibility helps uncover hidden risks, prioritize high-risk systems, and create a solid foundation for AI governance.
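As a rough illustration, an inventory entry could be modeled as a small record holding the attributes listed above. This is a minimal sketch, not a prescribed schema: the `AISystemRecord` class, the `find_gaps` helper, and the sample systems are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class AISystemRecord:
    """One inventory entry (hypothetical schema for illustration)."""
    name: str
    goal: str
    owner: str
    lifecycle_stage: str  # e.g. "pilot", "production"
    data_sources: List[str] = field(default_factory=list)
    dependencies: List[str] = field(default_factory=list)


def find_gaps(inventory: List[AISystemRecord]) -> Dict[str, List[str]]:
    """Return {system name: [empty fields]} for incomplete records."""
    gaps = {}
    for record in inventory:
        # An empty string or empty list counts as undocumented.
        missing = [key for key, value in vars(record).items() if not value]
        if missing:
            gaps[record.name] = missing
    return gaps


# Sample data, invented for the example.
inventory = [
    AISystemRecord(
        name="support-chatbot",
        goal="Answer customer questions",
        owner="cx-team",
        lifecycle_stage="production",
        data_sources=["ticket-history"],
        dependencies=["vendor-llm-api"],
    ),
    AISystemRecord(
        name="resume-screener",
        goal="Rank job applicants",
        owner="",  # no accountable owner recorded yet
        lifecycle_stage="pilot",
        data_sources=["applicant-db"],
    ),
]

gaps = find_gaps(inventory)
for system, missing in gaps.items():
    print(f"{system}: missing {', '.join(missing)}")
```

Flagging incomplete records is one simple way to surface hidden risk: a system with no recorded owner or undocumented dependencies is hard to govern, whatever its risk score turns out to be.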

Step 3: Rank Risks

Analyze and rank risks based on probability and impact, including ethical and reputational consequences. Risk scoring and matrices can help identify issues that exceed risk tolerance and guide resource allocation toward the most critical AI vulnerabilities.

Step 4: Mitigate Risks by Priority

Mitigate the highest-impact risks first by assigning teams, mitigation plans, and timelines. Add controls such as access restrictions, audits, monitoring, and encryption, and track progress through risk and performance indicators.
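The scoring and priority ordering described in the ranking and mitigation steps could be sketched as follows. This assumes a common likelihood-times-impact scheme on 1 to 5 scales; the `Risk` class, the `prioritize` helper, the tolerance value of 12, and the sample risks are illustrative assumptions, not values taken from any of the frameworks above.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Risk:
    system: str
    description: str
    likelihood: int  # 1 (rare) to 5 (almost certain), assumed scale
    impact: int      # 1 (negligible) to 5 (severe), assumed scale

    @property
    def score(self) -> int:
        # Simple risk-matrix score: likelihood multiplied by impact.
        return self.likelihood * self.impact


RISK_TOLERANCE = 12  # hypothetical threshold; each organization sets its own


def prioritize(risks: List[Risk],
               tolerance: int = RISK_TOLERANCE) -> Tuple[List[Risk], List[Risk]]:
    """Sort risks by score (highest first) and split them at the tolerance."""
    ranked = sorted(risks, key=lambda r: r.score, reverse=True)
    to_mitigate = [r for r in ranked if r.score > tolerance]
    to_watch = [r for r in ranked if r.score <= tolerance]
    return to_mitigate, to_watch


# Sample risks, invented for the example.
risks = [
    Risk("support-chatbot", "Prompt injection leaks customer data", 3, 4),
    Risk("resume-screener", "Biased ranking of applicants", 4, 5),
    Risk("support-chatbot", "Outdated answers", 2, 2),
    Risk("fraud-model", "Model drift lowers detection rate", 3, 5),
]

to_mitigate, to_watch = prioritize(risks)
for risk in to_mitigate:
    print(f"[{risk.score:>2}] {risk.system}: {risk.description}")
```

Risks above the tolerance are the ones that get owners, mitigation plans, and timelines first; the rest stay on a watch list and are rescored as conditions change.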

Step 5: Continuously Monitor

Implement continuous monitoring to detect model drift, security threats, data exposure, and bias. Continuous oversight lets risks be found early, supports governance, and maintains long-term compliance, resilience, and trust in AI systems.
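One possible way to automate a drift check is the Population Stability Index (PSI), which compares the distribution of a model input or score at deployment time against a recent window. The sketch below is only an illustration of the idea: the function name, the bin proportions, and the 0.2 alert threshold (a widely used rule of thumb, not a standard) are assumptions.

```python
import math
from typing import Sequence


def population_stability_index(expected: Sequence[float],
                               actual: Sequence[float],
                               eps: float = 1e-6) -> float:
    """PSI between two binned distributions given as proportions per bin."""
    if len(expected) != len(actual):
        raise ValueError("Both distributions must use the same bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        # Clamp to eps so empty bins do not cause log(0) or division by zero.
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


DRIFT_THRESHOLD = 0.2  # assumed rule-of-thumb alert level

# Share of model scores per bin: at deployment vs. the latest window
# (numbers invented for the example).
baseline = [0.25, 0.25, 0.25, 0.25]
current = [0.10, 0.20, 0.30, 0.40]

psi = population_stability_index(baseline, current)
if psi > DRIFT_THRESHOLD:
    print(f"Drift alert: PSI={psi:.3f} exceeds {DRIFT_THRESHOLD}")
else:
    print(f"No significant drift: PSI={psi:.3f}")
```

A check like this covers only drift; security threats, data exposure, and bias each need their own signals, which can feed the same alerting and review process.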

Final Thoughts on AI Risk Assessment

Overall, implementing an AI risk assessment framework gives security teams a structured approach to cybersecurity that improves their ability to predict, detect, and respond to adversary behavior, and helps protect the organization from future threats and vulnerabilities.

Akto's real-time threat detection and blocking capabilities ensure that AI Agents remain protected throughout their lifecycle. With these groundbreaking features, Akto is paving the way for a new generation of AI Agent security solutions that strengthen security teams' ability to detect, prevent, and respond to API-related threats.

Book a demo to learn more about Akto's Agentic AI security and MCP security.
