7 Best AI Security Frameworks for Enterprises

Explore 7 top AI security frameworks from NIST, MITRE, OWASP, Google, Cisco, CSA, and Databricks to strengthen your enterprise AI security program.

Ankita Gupta

Feb 17, 2026

As we were reviewing AI security approaches with customers, one thing became clear very quickly: AI security is no longer just “OWASP Top 10 + some guardrails.”

Over the past year, the ecosystem has produced a meaningful number of AI security frameworks, each addressing a different layer of the problem. Governance, adversarial threats, application vulnerabilities, platform controls, lifecycle security, and now agentic behavior are being tackled independently but thoughtfully.

Importantly, these frameworks are not redundant. Most were created to solve different problems, for different audiences, at different layers of the AI stack.

This post reviews seven influential AI security frameworks from MITRE, the National Institute of Standards and Technology (NIST), Cisco, the OWASP® Foundation, Databricks, Google, and the Cloud Security Alliance (CSA). It concludes with a practical way security teams can combine them into one coherent enterprise AI security program.

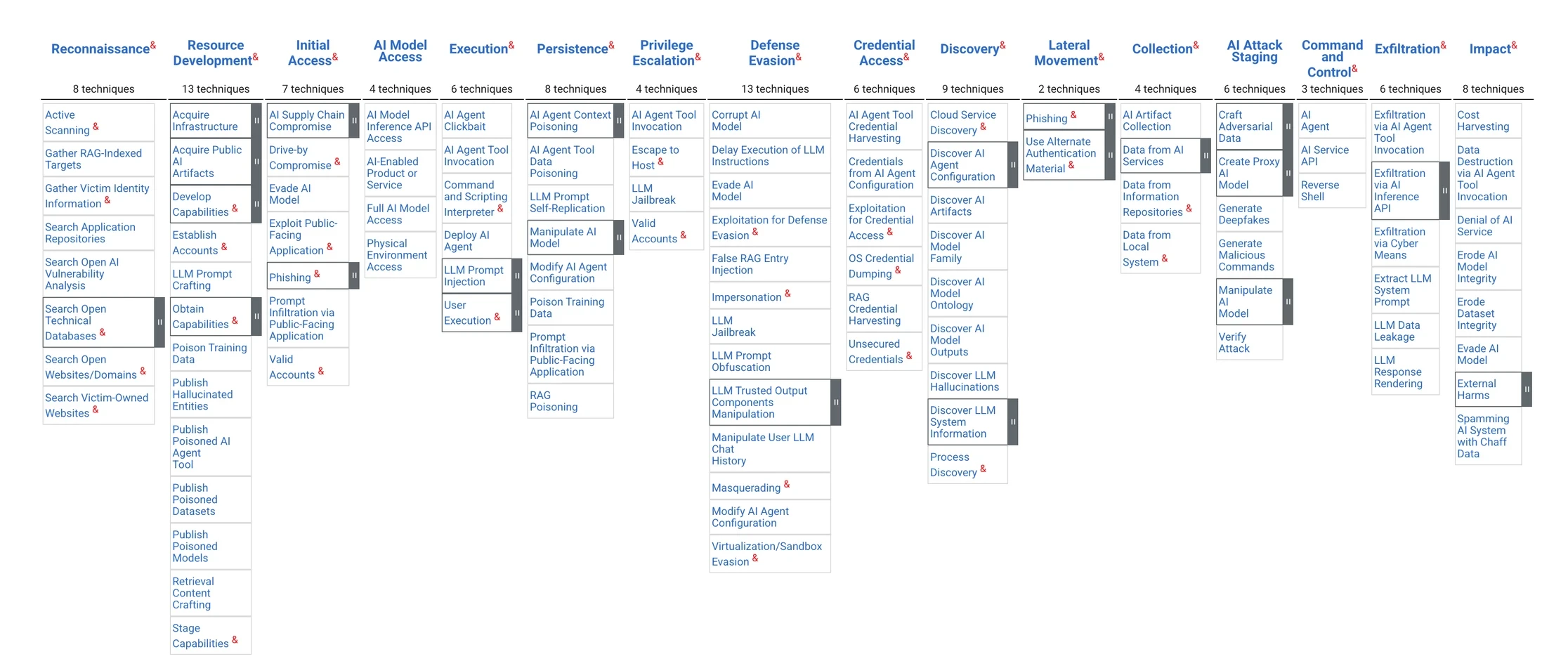

1) MITRE ATLAS (Adversarial Threat Landscape for AI Systems)

What it is: A community-driven threat knowledge base for AI/ML attacks, conceptually similar to MITRE ATT&CK but for AI.

Core focus: Adversarial threat modeling: how attackers manipulate models, data, and inference behavior (e.g., evasion, poisoning, extraction).

Key components: ATLAS organizes threats into tactics (attacker goals) and techniques (how they do it), supported by examples/case studies.

Why security teams use it:

A common language for AI attack paths

Strong foundation for AI red teaming, detection hypotheses, and threat modeling

Useful for aligning internal security teams and vendors on “what attacks matter.”
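To make the tactic/technique structure concrete, here is a minimal Python sketch of how a team might tag its own red-team test cases with ATLAS-style tactics and spot attacker goals it has not yet exercised. The tactic list is a small illustrative subset, and the test cases and their tactic assignments are hypothetical; the current ATLAS matrix is the authoritative source for tactics and technique IDs.

```python
# Illustrative subset of ATLAS tactics (attacker goals); an assumption for this
# sketch, not the full matrix.
TACTICS = ["Reconnaissance", "Persistence", "Exfiltration", "Impact"]

# Hypothetical red-team test cases, each tagged with one tactic and a
# free-text technique label. The tactic assignments are illustrative.
TEST_CASES = [
    {"name": "adversarial-image-evasion", "tactic": "Impact", "technique": "evasion"},
    {"name": "poisoned-finetune-set", "tactic": "Persistence", "technique": "poisoning"},
    {"name": "model-extraction-via-api", "tactic": "Exfiltration", "technique": "extraction"},
]


def coverage_by_tactic(cases, tactics):
    """Map each tactic to the names of test cases that exercise it."""
    coverage = {t: [] for t in tactics}
    for case in cases:
        coverage.setdefault(case["tactic"], []).append(case["name"])
    return coverage


def untested_tactics(cases, tactics):
    """Tactics with no red-team test case yet: candidate gaps in the test plan."""
    coverage = coverage_by_tactic(cases, tactics)
    return [t for t in tactics if not coverage[t]]


print(untested_tactics(TEST_CASES, TACTICS))  # ['Reconnaissance']
```

The same tagging can feed detection hypotheses: each untested tactic is a prompt to ask what telemetry would reveal that attacker goal.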

Gap: It is a threat taxonomy, not an operating model. It doesn’t tell you how to build governance, implement controls, or run a risk program. Pair it with NIST + control frameworks.

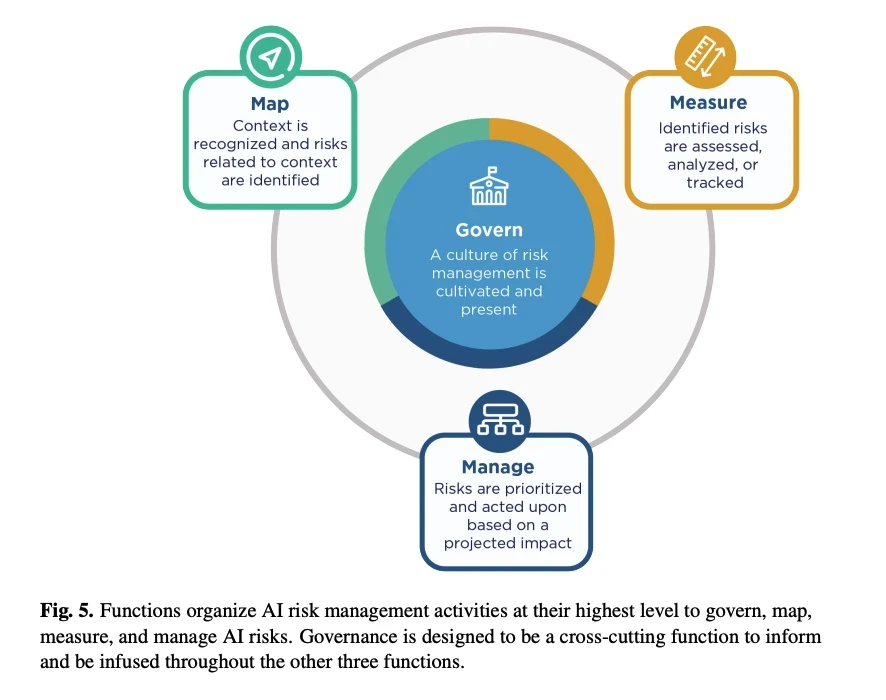

2) NIST AI Risk Management Framework (AI RMF)

What it is: A voluntary risk management framework (AI RMF 1.0, 2023) designed to help organizations manage AI risks and build “trustworthy AI.”

Core focus: An enterprise risk approach across the lifecycle, combining technical risks (robustness, privacy, security) with broader trust dimensions (transparency, accountability, fairness).

Key components: Four functions (Govern, Map, Measure, and Manage), plus a playbook and crosswalks/profiles, including GenAI-focused guidance such as the Generative AI Profile (NIST AI 600-1).

Why security teams use it:

A clean “program backbone” for ownership, lifecycle controls, measurement, and oversight

Works well as the umbrella that security, legal, and product can align on

Helps translate “AI security” from tool-level to enterprise risk posture

Gap: It’s intentionally high-level. You still need detailed threat catalogs (MITRE/OWASP) and concrete control libraries (Databricks/cloud frameworks) to operationalize it.

3) Cisco Integrated AI Security & Safety Framework

What it is: A broad taxonomy, introduced by Cisco in 2025, that unifies AI security threats and AI safety harms in a single model.

Core focus: End-to-end risk coverage across the AI ecosystem: model threats, data/supply chain risks, tool-use risks, content harms, and agentic behavior.

Key components: A structured taxonomy designed to be usable by multiple audiences (executives vs. defenders vs. builders) and to reflect modern realities:

Risks span development → deployment → operations

Multi-agent / tool-using systems create new failure modes

Multimodal inputs expand the attack surface

Security events often lead directly to safety failures (e.g., injection → harmful output)

Why security teams use it:

Helps avoid blind spots (especially around agent autonomy and content harms)

Useful when creating an enterprise AI risk register and control coverage map

Good framing tool for leadership conversations: “security + safety are connected.”
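The “injection → harmful output” link is easier to reason about as a graph. A minimal sketch, assuming a hypothetical risk register where each entry lists the risks it can lead to; a breadth-first search then shows which safety harms a single security event can eventually reach. The entries are illustrative and are not drawn from Cisco’s taxonomy.

```python
from collections import deque

# Hypothetical risk-register edges: each entry lists risks it can lead to.
# Entries are illustrative, not taken from Cisco's taxonomy.
LEADS_TO = {
    "indirect prompt injection": ["tool misuse", "harmful output"],
    "tool misuse": ["data exfiltration", "unsafe real-world action"],
    "training data poisoning": ["harmful output"],
}
SAFETY_HARMS = {"harmful output", "unsafe real-world action"}


def reachable(start, graph):
    """Breadth-first search: every risk reachable from `start` via one or more edges."""
    seen, queue = set(), deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def downstream_safety_harms(security_event, graph=LEADS_TO, harms=SAFETY_HARMS):
    """Safety harms that a single security event can eventually cause."""
    return reachable(security_event, graph) & harms


print(sorted(downstream_safety_harms("indirect prompt injection")))
# ['harmful output', 'unsafe real-world action']
```

Keeping security and safety entries in one register is what makes this traversal possible; split registers would hide the chain.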

Gap: A taxonomy can become “too broad to act on” without prioritization. It needs a risk management backbone (NIST/ISO) and enforceable controls to become operational.

Join Amy Chang’s session presenting the Cisco Integrated AI Security & Safety Framework on Feb 24 at Aktonomy, hosted by Akto.io.

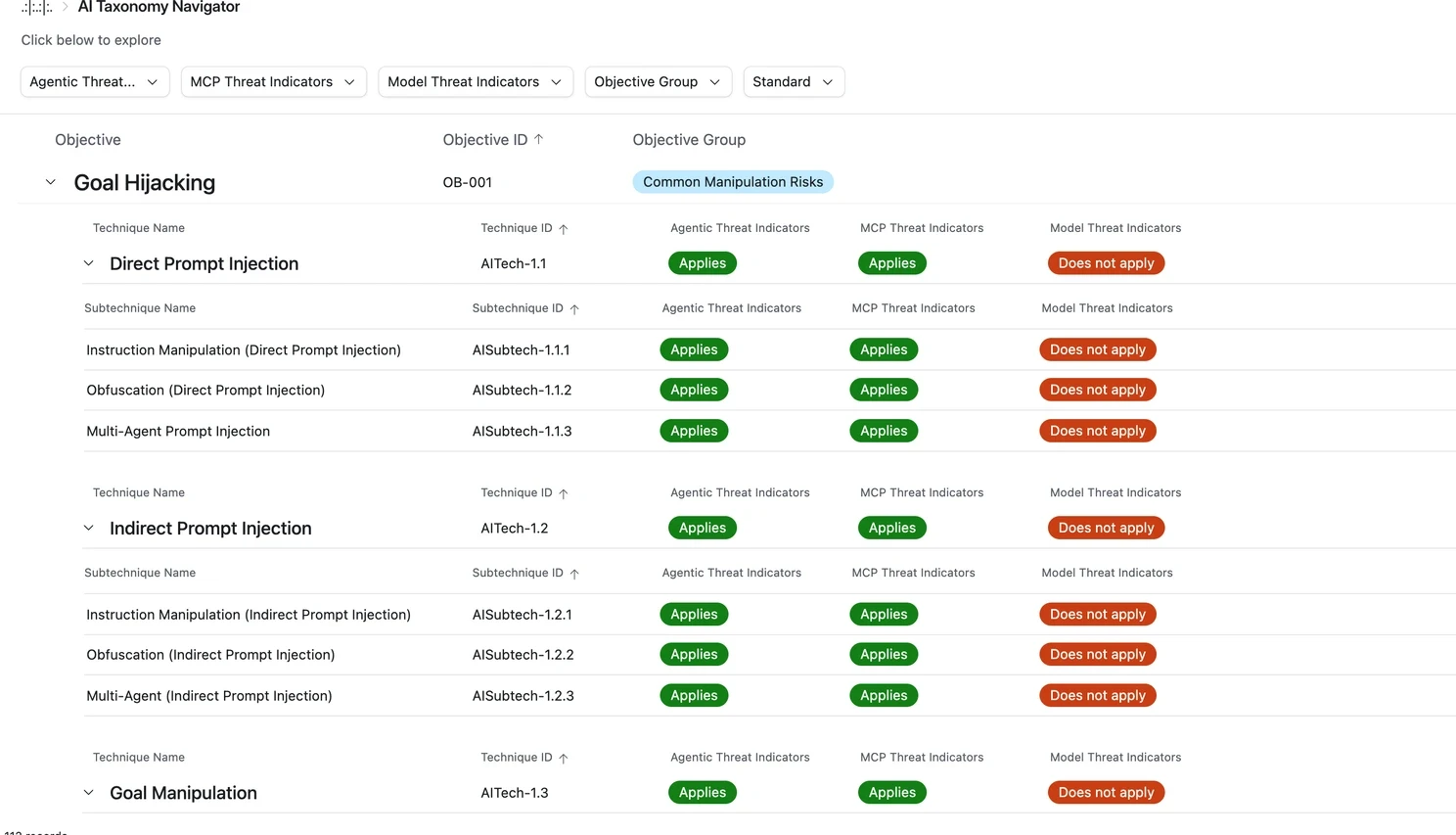

4) OWASP Top 10 for LLM Applications + Agentic AI

What it is: Two community-driven Top 10 lists from the OWASP GenAI Security Project: one for LLM applications and one for agentic AI.

Core focus: Practical application-layer vulnerabilities: what breaks when you ship LLM-based apps and agents into production.

Key components: Ranked risk categories with examples and mitigations. Typical themes include:

Prompt injection and insecure output handling

Sensitive data leakage

Supply chain risks (plugins, dependencies, connected tools)

Over-permissive tool access and privilege abuse in agentic workflows

Overreliance on model output without validation
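Two of these themes translate directly into code. The sketch below, with hypothetical agent and tool names, treats model output as untrusted data (escaping it with Python’s standard `html.escape` before rendering) and enforces a deny-by-default tool allowlist per agent. It illustrates the mitigation pattern only and is not an OWASP reference implementation.

```python
import html

# Hypothetical per-agent allowlists (least privilege). Agent and tool names
# are assumptions for illustration.
TOOL_ALLOWLIST = {
    "support-agent": {"search_kb", "create_ticket"},
    "billing-agent": {"lookup_invoice"},
}


class ToolNotPermitted(Exception):
    """Raised when an agent requests a tool outside its allowlist."""


def authorize_tool_call(agent: str, tool: str) -> None:
    """Deny by default: unknown agents and unlisted tools are both rejected."""
    if tool not in TOOL_ALLOWLIST.get(agent, set()):
        raise ToolNotPermitted(f"{agent} may not call {tool}")


def render_model_output(text: str) -> str:
    """Treat model output as untrusted: HTML-escape it before embedding in a page."""
    return f"<div class='answer'>{html.escape(text)}</div>"


authorize_tool_call("support-agent", "create_ticket")  # allowed, returns None
print(render_model_output("<script>alert(1)</script>"))
# <div class='answer'>&lt;script&gt;alert(1)&lt;/script&gt;</div>
```

Checking the allowlist outside the model, at the point where the tool is actually invoked, matters: a prompt-injected model can ask for any tool, but it cannot change what the gate permits.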

Why security teams use it:

Extremely actionable for AppSec: secure design reviews, test planning, and release gates

Works well as a minimum “AI AppSec standard” across product teams

Great for internal awareness and alignment between security + engineering

Gap: It’s a Top 10, so by design it’s not comprehensive, not a governance model, and not a full control framework. It must be paired with lifecycle security and enterprise risk oversight.

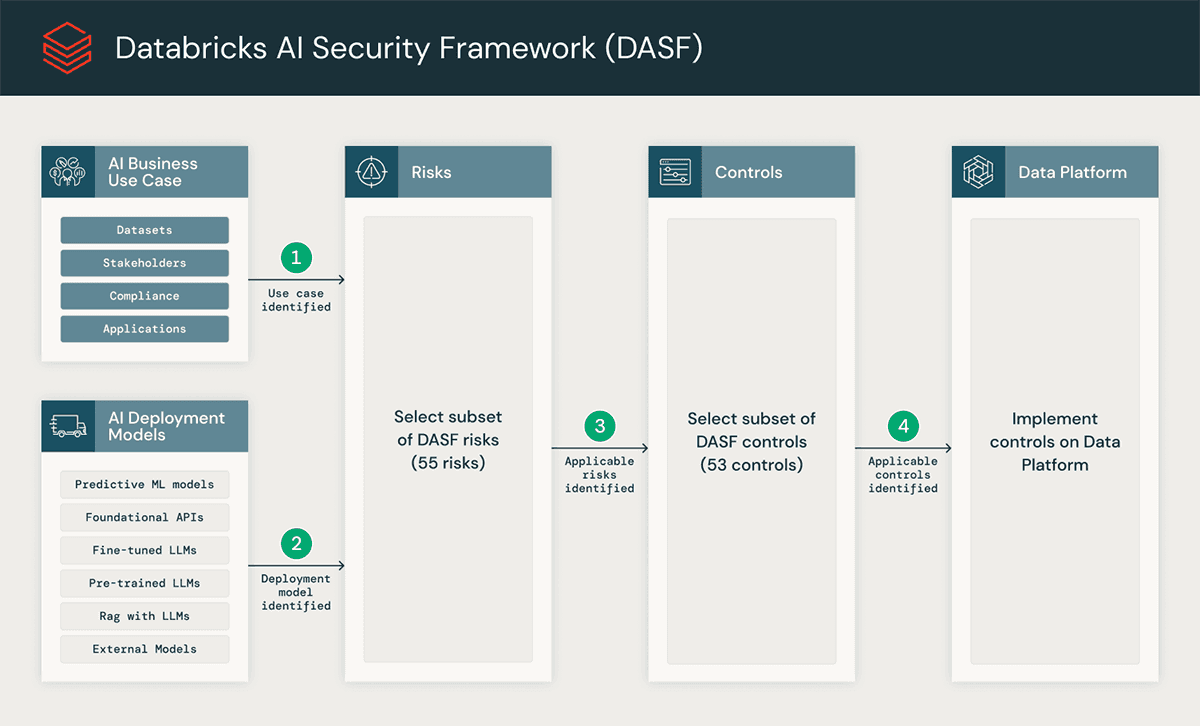

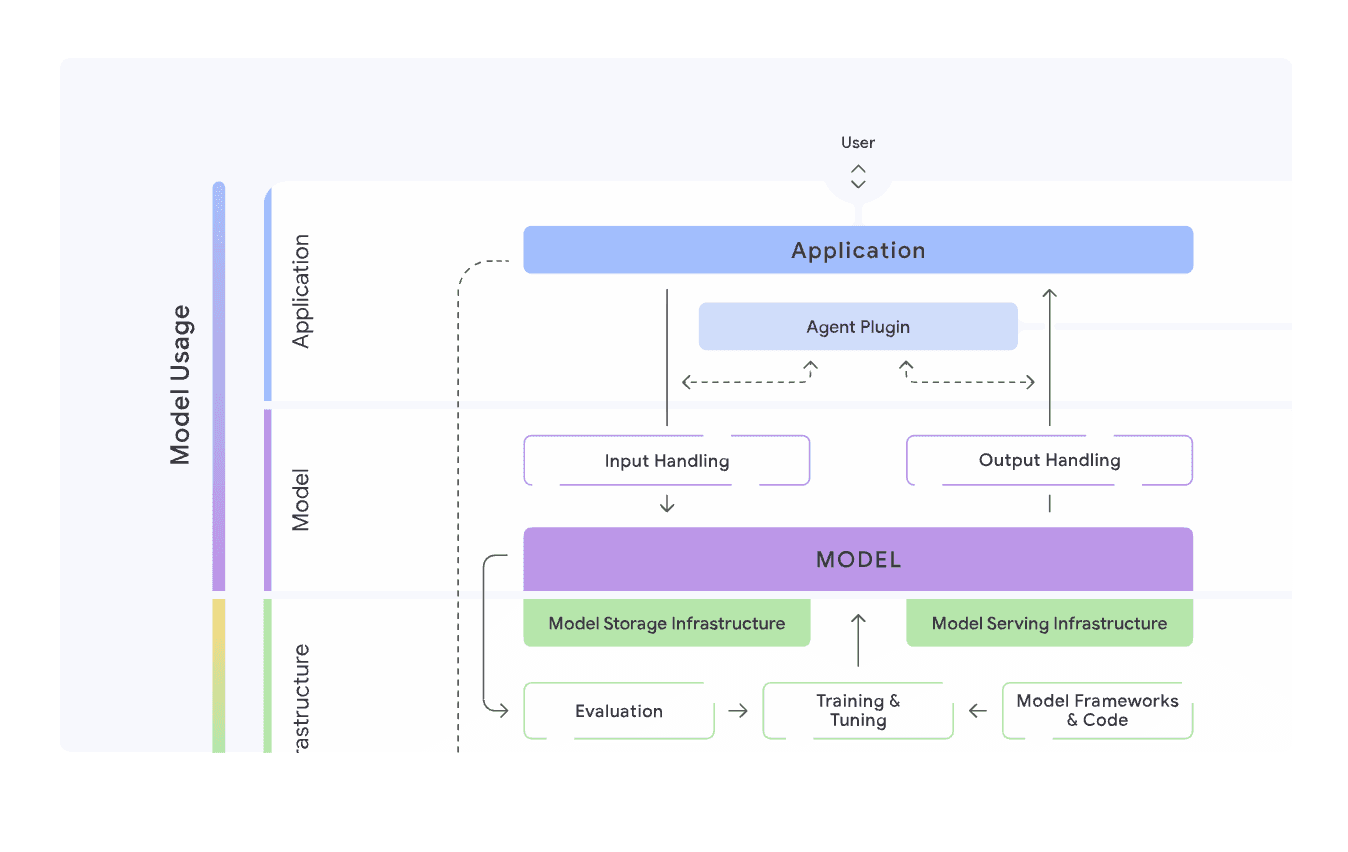

5) Databricks AI Security Framework (DASF)

What it is: A lifecycle security framework (notably DASF 2.0) built by Databricks as a practical playbook for securing AI systems end-to-end. It was originally shaped by the Lakehouse context but is presented as broadly applicable.

Core focus: Hardening the AI/ML pipeline from data → training → deployment → monitoring with explicit risks and controls.

Key components:

Breaks AI into major lifecycle stages and components

Includes a risk/control matrix (dozens of risks, each mapped to recommended controls)

Provides crosswalks to other standards (useful for compliance alignment)

Why security teams use it:

Strong bridge from “framework talk” to “what controls do we implement?”

Makes gap analysis practical (what are we missing in data governance, model management, serving security, monitoring?)

Useful for platform, data, and ML engineering alignment
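A gap analysis against a risk/control matrix largely reduces to set arithmetic. This is a minimal sketch, assuming a hypothetical excerpt of the matrix keyed by lifecycle stage; the risk and control labels are illustrative and are not DASF’s own identifiers.

```python
# Hypothetical excerpt of a DASF-style risk/control matrix, keyed by
# (lifecycle stage, risk). Labels are illustrative, not DASF identifiers.
RISK_CONTROL_MATRIX = {
    ("data", "training data poisoning"): {"data lineage", "dataset access control"},
    ("training", "model theft"): {"model registry access control"},
    ("deployment", "prompt injection"): {"input filtering", "output validation"},
    ("monitoring", "model drift"): {"inference logging"},
}


def gap_analysis(implemented):
    """Per stage, list each risk's recommended controls that are not yet implemented."""
    gaps = {}
    for (stage, risk), controls in RISK_CONTROL_MATRIX.items():
        missing = controls - implemented
        if missing:
            gaps.setdefault(stage, {})[risk] = sorted(missing)
    return gaps


implemented = {"data lineage", "dataset access control", "input filtering", "inference logging"}
print(gap_analysis(implemented))
# {'training': {'model theft': ['model registry access control']},
#  'deployment': {'prompt injection': ['output validation']}}
```

Grouping the result by stage lets each owning team (data, ML, platform) see only its own gaps.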

Gap: As with most frameworks, the hardest area remains runtime control for autonomous agents and cross-platform enforcement. Its breadth can also overwhelm teams without prioritization.

6) Google Secure AI Framework (SAIF)

What it is: Google’s secure-by-design framework and principles (introduced in 2023), meant to be adopted broadly, plus related ecosystem work such as the Coalition for Secure AI (CoSAI).

Core focus: Embedding security into AI from day one: security foundations, detection/response, automation, harmonized controls, feedback loops, and business-context risk.

Key components: Six core elements emphasizing proactive security and continuous adaptation, plus resources and self-assessment guidance.

Why security teams use it:

Strong “operating philosophy” to drive engineering culture change

Useful to structure lifecycle security expectations for AI teams

Encourages consistency across platforms and environments

Gap: Principle-driven rather than a detailed control catalog; it needs to be paired with specific threat lists and control frameworks to be fully actionable.

7) Cloud Security Alliance – AI Controls Matrix (AICM)

What it is: A vendor-neutral AI control framework from the Cloud Security Alliance, extending CSA’s Cloud Controls Matrix (CCM) into AI.

Core focus: Operationalizing AI security by mapping AI risks to concrete controls.

Key components:

A structured control catalog covering AI data, models, pipelines, deployment, and operations

Mapping of AI risks to preventive, detective, and corrective controls

Alignment with existing enterprise security programs (e.g., NIST AI RMF, ISO 27001/42001, cloud security controls)

Designed to be used for gap analysis, architecture reviews, and audits
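The preventive/detective/corrective split suggests a simple coverage check: for each risk, which control types have no control at all? A minimal sketch with hypothetical catalog entries (AICM’s real control IDs, domains, and wording differ):

```python
from enum import Enum


class ControlType(Enum):
    PREVENTIVE = "preventive"
    DETECTIVE = "detective"
    CORRECTIVE = "corrective"


# Hypothetical catalog entries; IDs and risk names are illustrative only.
CONTROLS = [
    {"id": "C-1", "risk": "sensitive data leakage", "type": ControlType.PREVENTIVE},
    {"id": "C-2", "risk": "sensitive data leakage", "type": ControlType.DETECTIVE},
    {"id": "C-3", "risk": "model tampering", "type": ControlType.PREVENTIVE},
]


def missing_control_types(risk, controls=CONTROLS):
    """Control types with no control mapped to `risk`, in enum definition order."""
    present = {c["type"] for c in controls if c["risk"] == risk}
    return [t for t in ControlType if t not in present]


for risk in sorted({c["risk"] for c in CONTROLS}):
    print(risk, [t.value for t in missing_control_types(risk)])
# model tampering ['detective', 'corrective']
# sensitive data leakage ['corrective']
```

A risk with only preventive controls is a common finding in audits: when prevention fails, nothing detects or recovers from the failure.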

Why security teams use it:

Bridges the gap between risk frameworks (NIST/ISO) and real security controls

Helps answer the practical question: “What controls do we actually need to put in place?”

Fits naturally into organizations already using CSA CCM or cloud security baselines

Useful for vendor evaluations and internal assurance reviews

Gap: CSA AICM focuses on what controls should exist, not on providing enforcement or runtime behavior management itself.

Bringing It Together: How Security Teams Should Use These Frameworks

A useful way to think about this is layered coverage:

Governance & accountability (the program backbone): NIST AI RMF, Cloud Security Alliance AI Controls Matrix

Threat modeling (“what can go wrong”): MITRE ATLAS, Cisco taxonomy (broad coverage), OWASP Top 10 (app/agent focus)

Control implementation (what to actually build): Databricks DASF, Google SAIF principles

Final Thoughts

The most important takeaway is: AI security is not a single control; it’s a system.

Governance without controls becomes paperwork. Controls without threat models become checkbox security. Threat models without monitoring become outdated the moment the model, tools, or prompts change.
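One way to catch that staleness automatically is to fingerprint the parts of the system a threat model depends on and compare the result against the fingerprint recorded at the last review. This is a sketch under assumptions: which fields to hash (here model version, tool names, and system prompt) is a design choice, and the function names are hypothetical.

```python
import hashlib
import json


def system_fingerprint(model_version: str, tools: list[str], system_prompt: str) -> str:
    """Stable SHA-256 hash of the inputs a threat model depends on."""
    payload = json.dumps(
        {"model": model_version, "tools": sorted(tools), "prompt": system_prompt},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def threat_model_is_stale(reviewed_fingerprint: str, model_version: str,
                          tools: list[str], system_prompt: str) -> bool:
    """True if the model, tool set, or prompt changed since the last review."""
    return system_fingerprint(model_version, tools, system_prompt) != reviewed_fingerprint


reviewed = system_fingerprint("model-v1", ["search", "email"], "You are a support bot.")
print(threat_model_is_stale(reviewed, "model-v1", ["email", "search"], "You are a support bot."))
# False (same tools, different order)
print(threat_model_is_stale(reviewed, "model-v1", ["search", "email", "shell"], "You are a support bot."))
# True (a new tool was added)
```

Sorting the tool list keeps the fingerprint stable when only the order changes, so reordering tools does not trigger a spurious review.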

A practical approach is to treat these frameworks as a stack:

NIST AI RMF for the risk operating model

CSA AICM + Databricks DASF for control definition and lifecycle coverage

MITRE ATLAS + OWASP + Cisco for adversarial realism and application/agent risk

Google SAIF to keep teams building “secure-by-design” from the start

From there, maturity comes down to execution: building a repeatable process for AI inventory, risk assessment, control mapping, testing (including red teaming), and continuous monitoring.
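As a starting point, that process can be tracked per system in a simple inventory record. A minimal sketch, assuming hypothetical field and step names that mirror the sentence above; the inventory itself is just the list of records, and testing is meant to include red teaming.

```python
from dataclasses import dataclass, field
from typing import Optional

# Steps after a system enters the inventory, in the order described above.
# Step names are hypothetical labels, not taken from any framework.
STEPS = ["risk_assessment", "control_mapping", "testing", "monitoring"]


@dataclass
class AISystem:
    name: str
    owner: str
    completed: set = field(default_factory=set)

    def complete(self, step: str) -> None:
        """Mark a step done; reject names outside the defined process."""
        if step not in STEPS:
            raise ValueError(f"unknown step: {step}")
        self.completed.add(step)

    def next_step(self) -> Optional[str]:
        """First step not yet completed, or None once monitoring is in place."""
        for step in STEPS:
            if step not in self.completed:
                return step
        return None


# The inventory is the list of AI systems, each with a named owner.
inventory = [AISystem("support-chatbot", "cx-team"), AISystem("fraud-model", "risk-team")]
inventory[0].complete("risk_assessment")

for system in inventory:
    print(system.name, "->", system.next_step())
# support-chatbot -> control_mapping
# fraud-model -> risk_assessment
```

Requiring an owner on every record is deliberate: an AI system nobody owns is one nobody will reassess when its model, tools, or prompts change.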

The AI ecosystem will keep evolving. The winning security teams will be those that adapt faster than the threat surface changes, and these frameworks, used together, are the best starting toolkit we have today.
