The State of Agentic AI Security 2025

Agentic AI Has Arrived, and Most Organizations Haven't Secured It

Akash Vineet

Dec 8, 2025

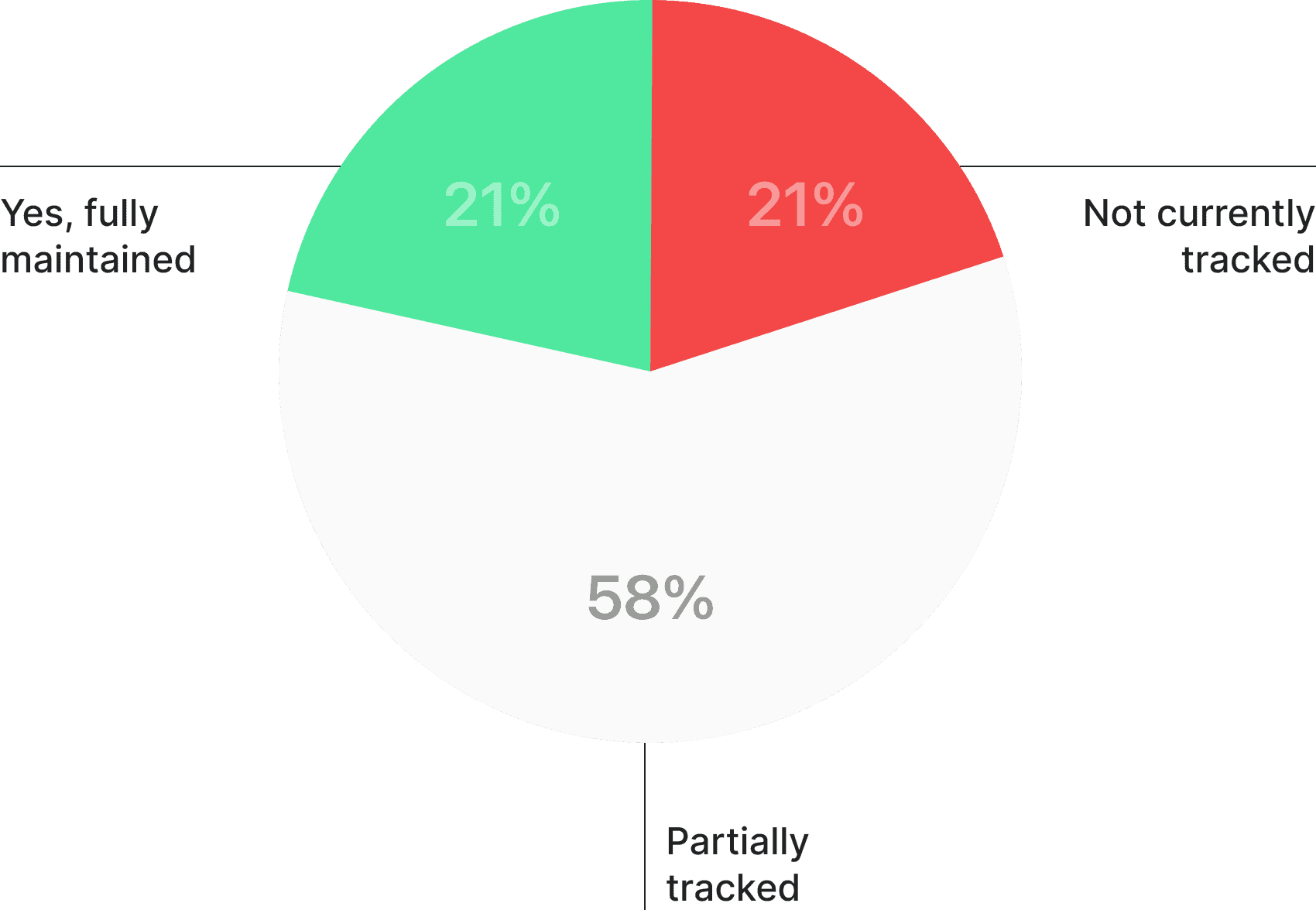

The State of Agentic AI Security 2025 survey reveals a critical disconnect: 69% of enterprises are deploying AI agents, but only 21% have the visibility needed to secure them.

As enterprises accelerate toward autonomy, AI agents are no longer experimental side projects; they’re becoming active participants inside business systems. They call APIs, move data, trigger workflows, and interact with other agents.

And with every new autonomous action, a new security surface is created.

In 2025, the story isn’t whether enterprises will adopt agentic AI. They already have.

The story is whether they can adopt it safely.

This blog distills the most important findings from The State of Agentic AI Security Report 2025, offering CISOs a clear view into where enterprises stand and where the next wave of security innovation must emerge.

Get the full State of Agentic AI Security 2025 Report

Agentic AI Adoption Has Outpaced Security Readiness

Organizations are moving fast, deploying agents across engineering, operations, customer support, and internal automation workflows.

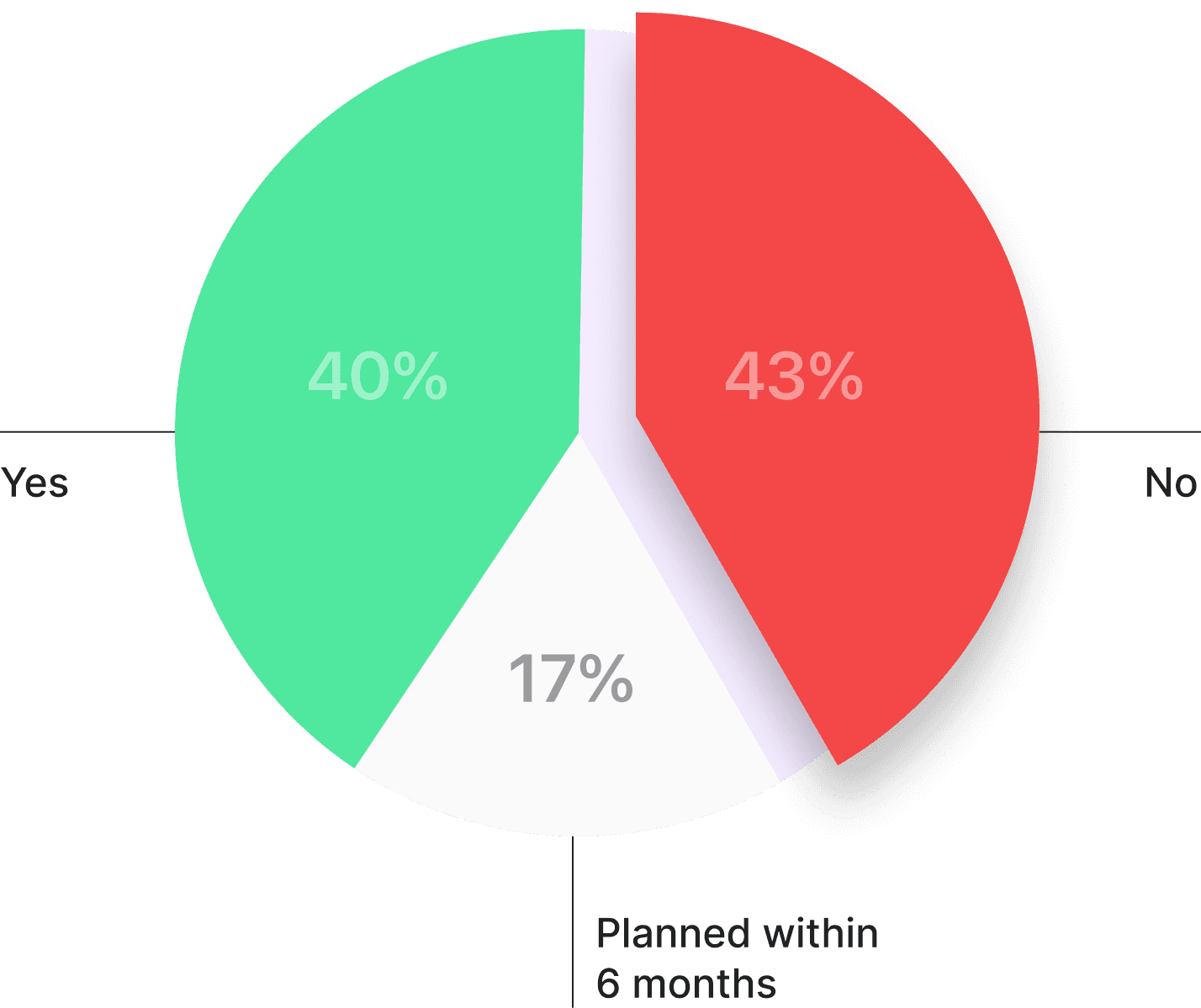

69% of enterprises are already piloting or running early production agent deployments.

This shift marks a clear inflection point: agentic AI is no longer an experiment; it is a security surface.

But adoption has outpaced visibility, governance, and foundational risk controls, creating an exposure gap that grows wider every quarter.

The Visibility Crisis: You Can’t Secure What You Can’t See

The report reveals a single overwhelming bottleneck:

Only 21% of organizations maintain a fully up-to-date inventory of agents, MCP servers, tools, and connections.

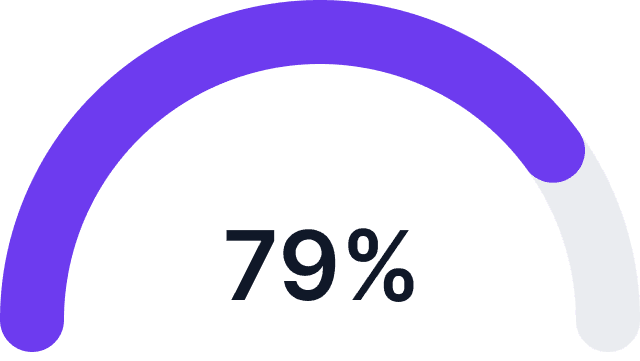

This means 79% of enterprises operate with blind spots, where agents invoke tools, touch data, or trigger actions that the security team cannot fully observe.

Without inventory, organizations cannot answer basic questions:

What agents exist?

What can they access?

What tools can they invoke?

What data do they touch?

Which connections are active?

As Henri Du Plessis, Managing Security Engineer at Toyota Connected North America, told us: "My biggest concerns are visibility and the growing gap between rapid AI development and the security tooling meant to protect it."

Untracked agents lead to untracked actions, and untracked actions are the real risk.
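To make the inventory questions above concrete, here is a minimal sketch of what an agent inventory could look like in code. The class and field names (`AgentRecord`, `AgentInventory`, `data_scopes`, and so on) are illustrative assumptions, not something defined in the report; a real inventory would also need discovery, ownership, and freshness tracking.

```python
from dataclasses import dataclass, field


@dataclass
class AgentRecord:
    """One inventory entry. All field names are hypothetical."""
    name: str
    owner: str
    tools: set[str] = field(default_factory=set)        # tools the agent can invoke
    data_scopes: set[str] = field(default_factory=set)  # data the agent can touch
    # Maps an endpoint (for example an MCP server name) to whether it is active.
    connections: dict[str, bool] = field(default_factory=dict)


class AgentInventory:
    """In-memory registry that answers the basic inventory questions."""

    def __init__(self) -> None:
        self._agents: dict[str, AgentRecord] = {}

    def register(self, record: AgentRecord) -> None:
        self._agents[record.name] = record

    def agents(self) -> list[str]:
        """What agents exist?"""
        return sorted(self._agents)

    def tools_for(self, name: str) -> set[str]:
        """What tools can this agent invoke?"""
        return set(self._agents[name].tools)

    def data_for(self, name: str) -> set[str]:
        """What data does this agent touch?"""
        return set(self._agents[name].data_scopes)

    def active_connections(self) -> dict[str, list[str]]:
        """Which connections are active, per agent?"""
        return {
            name: sorted(ep for ep, active in rec.connections.items() if active)
            for name, rec in sorted(self._agents.items())
        }

    def untracked(self, observed: set[str]) -> set[str]:
        """Agents observed at runtime that are missing from the inventory."""
        return observed - set(self._agents)
```

The `untracked` method is the piece that speaks to the blind-spot problem: comparing agents observed at runtime against the registered set surfaces whatever the inventory has missed.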

Governance Gaps Are Magnifying the Risk

Visibility isn’t the only foundational weakness.

79% of organizations have no formal governance policy for AI agents or MCP connections.

Without policy frameworks, there are no consistent standards for agent permissions, no identity requirements, no approval workflows for tool onboarding, and no baseline expectations for monitoring.

Organizations are effectively allowing autonomous systems to operate inside undefined trust boundaries.

Without inventory, governance cannot begin, and without governance, autonomy becomes a liability.
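As one possible illustration of the policy elements named above (permissions, identity requirements, tool approval, and a monitoring baseline), the following sketch checks a single agent configuration against a policy. Every name here (`AgentPolicy`, `AgentConfig`, `policy_violations`) is an assumption made for the example; the report does not prescribe a policy schema.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AgentPolicy:
    """Hypothetical organization-wide policy for agents."""
    allowed_tools: frozenset[str]
    require_identity: bool = True
    require_tool_approval: bool = True
    require_monitoring: bool = True


@dataclass(frozen=True)
class AgentConfig:
    """Hypothetical description of one deployed agent."""
    name: str
    identity: Optional[str]          # for example a service account; None if unset
    tools: frozenset[str]            # tools the agent is wired to use
    approved_tools: frozenset[str]   # tools that passed an onboarding review
    monitored: bool


def policy_violations(agent: AgentConfig, policy: AgentPolicy) -> list[str]:
    """Return human-readable violations; an empty list means compliant."""
    violations: list[str] = []
    if policy.require_identity and not agent.identity:
        violations.append("missing identity")
    for tool in sorted(agent.tools - policy.allowed_tools):
        violations.append(f"tool not permitted by policy: {tool}")
    if policy.require_tool_approval:
        for tool in sorted(agent.tools - agent.approved_tools):
            violations.append(f"tool not approved: {tool}")
    if policy.require_monitoring and not agent.monitored:
        violations.append("monitoring not enabled")
    return violations
```

A check like this only works if the agent configurations come from an inventory that is itself complete, which is why inventory has to come first.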

The Threat Model Has Shifted: It’s About Actions, Not Prompts

Early this year, AI security conversations centered on prompts: how they could be manipulated and how they shaped model outputs. Agentic AI breaks this paradigm entirely.

Agents now take actions, not just generate text. Today's agents can:

Issue API calls that modify or delete data

Trigger multi-step workflows across enterprise systems

Invoke external tools and services

Escalate privileges through chained actions

Interact with other agents invisibly

The report makes this shift clear:

“The new risk surface is what an agent can do, not what it can say.”

And confidence is low:

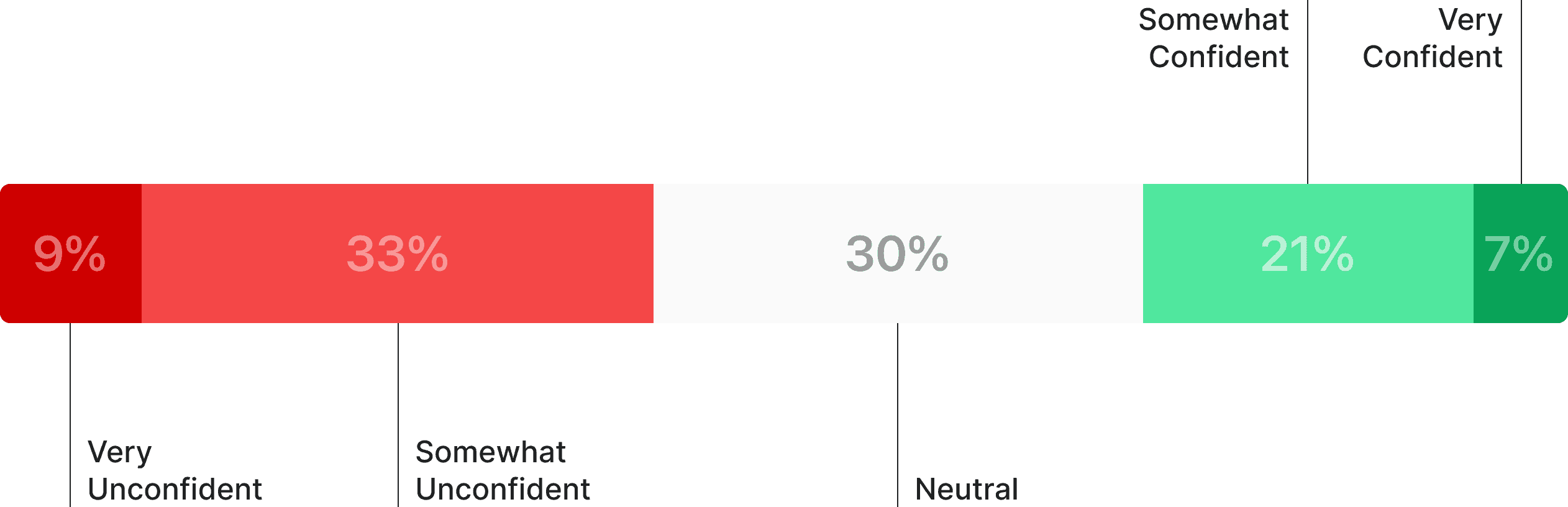

42% of practitioners are not confident in their ability to secure agent-to-system interactions.

Autonomous execution is where real-world impact begins, and existing controls were simply not designed for this behavior.

The Six Most Concerning Threats for CISOs in 2025

Based on survey responses, six threat categories emerged as the highest-impact risks:

1. Supply Chain Risks (LLM plug-ins, MCP integrations)

2. Data Leakage Through Autonomous Actions

3. Prompt Injection → Unsafe Execution

4. Uncontrolled Autonomy & Recursive Agent Loops

5. Regulatory Exposure From Opaque Decisions

6. Agent Impersonation or Spoofing

These are not hypothetical. They already appear in early deployments, often before organizations notice them.

The Readiness Gap: Most Organizations Lack Core Safety Controls

A striking data point:

60% of organizations have not conducted an AI or agentic risk assessment in the last 12 months.

Meanwhile:

Only 38% monitor AI traffic end-to-end (prompts, tool calls, outputs).

Only 41% have runtime guardrails in place.

Only 17% continuously monitor agent-to-agent interactions.

And the most concerning finding:

Nearly 20% of organizations have no controls at all: no monitoring, no guardrails, no enforcement.

Autonomy without guardrails isn’t innovation. It’s incident creation.

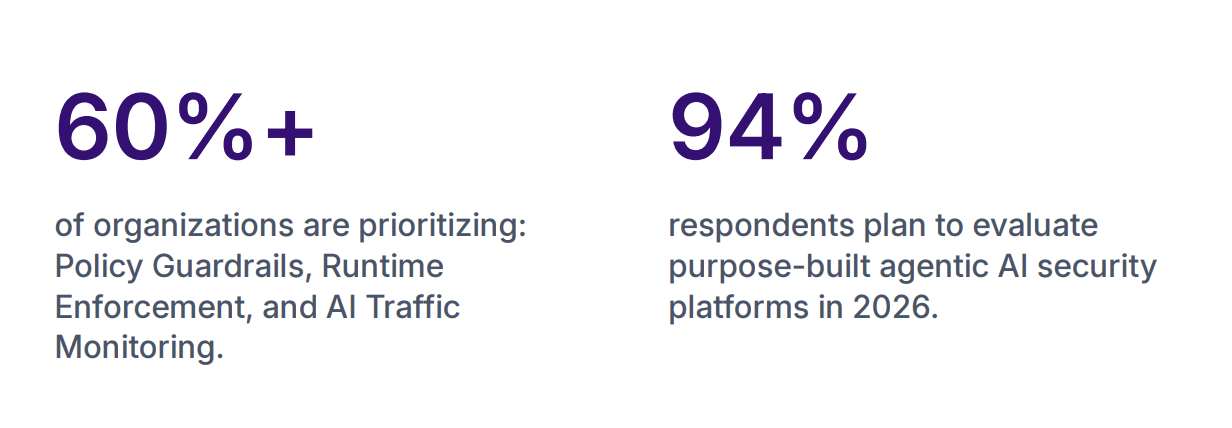

Additionally, 30% rely on homegrown scripts or custom tooling, a clear signal that the market lacks mature solutions. As Krantikishor Bora, Director - Information Security Risk at GoDaddy, emphasized: "Guardrails are essential for Agentic AI Security - they must be thoroughly verified, rigorously tested for their intended purpose, and strictly enforced to ensure compliance."

CISO Priorities for 2026: A New Control Plane Is Emerging

Security leaders are no longer treating agentic AI as a prompt-level problem. They’re treating it as an identity, access, and action-control challenge, similar to IAM, Zero Trust, or network segmentation.

What leaders want is clear: predictable, controllable, auditable agents. They need full action logs, strict execution boundaries, sandboxing, strong identity enforcement, default PII redaction, and the ability to block unsafe actions before impact.
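The controls listed above (action logs, execution boundaries, PII redaction, and blocking before impact) can be sketched as a thin wrapper around every agent action. This is a minimal sketch under stated assumptions: `ActionGuard`, `BlockedAction`, and `redact` are hypothetical names, the allowlist stands in for a real policy engine, and the email regex is only a placeholder for proper PII detection.

```python
import re
from dataclasses import dataclass, field
from typing import Any, Callable

# Deliberately simple email pattern; real PII detection needs far more than this.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def redact(text: str) -> str:
    """Replace email-like substrings before the text is written to a log."""
    return EMAIL_RE.sub("[REDACTED]", text)


class BlockedAction(Exception):
    """Raised when an agent attempts an action outside its boundary."""


@dataclass
class ActionGuard:
    """Hypothetical guard: allowlist check plus an audit log for every attempt."""
    allowed_actions: set[str]
    log: list[dict[str, str]] = field(default_factory=list)

    def execute(
        self,
        agent: str,
        action: str,
        payload: str,
        handler: Callable[[str], Any],
    ) -> Any:
        allowed = action in self.allowed_actions
        # Log every attempt, allowed or not, with the payload redacted.
        self.log.append({
            "agent": agent,
            "action": action,
            "payload": redact(payload),
            "decision": "allow" if allowed else "block",
        })
        if not allowed:
            # Block before the handler runs, so nothing has been changed yet.
            raise BlockedAction(f"{agent} may not perform {action}")
        return handler(payload)
```

The design choice worth noting is the ordering: the decision is logged and enforced before the handler is called, so a blocked action leaves an audit record but never reaches the target system. In this sketch redaction applies to the log only; the handler still receives the original payload.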

As Venkata Phani Patelkhana, Technical Software Architect at Dell, told us: "Confidence in adopting agentic AI securely starts with governance - test every agent, enforce guardrails, and monitor continuously to ensure trust and resilience."

Securing Autonomous AI Starts Now

Agentic AI introduces a new operational model, one where actions, not prompts, drive risk.

Organizations moving fast without an inventory, without governance, and without real-time controls are inheriting silent, compounding exposure that will be far more costly to unwind later.

2025 is the year enterprises realized:

Agents aren’t just users of systems. Agents are systems.

And systems need a security layer.

The enterprises that establish agent inventories, privilege policies, and runtime controls today will be the resilience leaders of tomorrow. Those that delay will find themselves managing autonomous systems operating beyond their security team's line of sight.

The governance layer is no longer an optional add-on to AI security. It's the control plane that determines whether autonomous systems operate safely or become new sources of systemic risk.

Download the Full Report

Get the full State of Agentic AI Security 2025 Report

Benchmark your organization's agentic AI security maturity

Understand the complete threat landscape and how peers are responding

Access detailed recommendations for CISOs entering 2026

Discover how leading organizations are building governance frameworks

The report includes insights from 100+ verified security and AI leaders across organizations spanning technology, financial services, healthcare, manufacturing, and telecommunications.
