LLM Guardrails for AI Security: Types, Principles, and Best Practices

Learn what LLM guardrails are, why they are critical for AI security, and the best strategies to implement guardrails for secure and reliable LLM applications.

Bhagyashree

Jan 21, 2026

Building a solid ground for guardrails ensures that LLM your business uses does not just perform well on paper but thrives securely and effectively in real world applications. While LLM evaluation prioritizes on refining accuracy, relevance and overall functionality, adopting LLM guardrails is about actively mitigating risks in real-time production environments.

This blog explores what is LLM guardrails and best strategies to implement LLM Guardrails

Image Source: Leanware

What are LLM Guardrails?

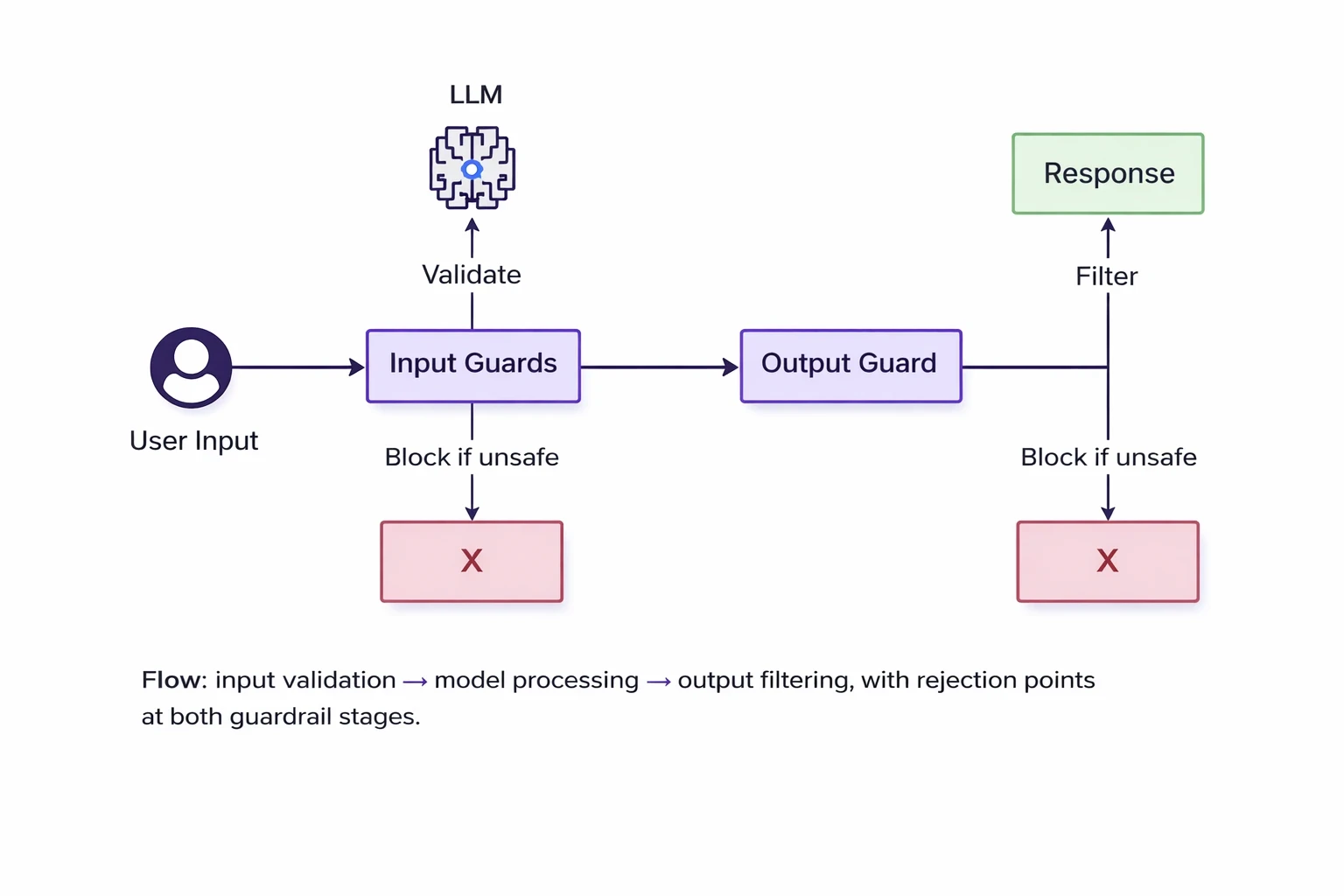

LLM Guardrails are predefined safety rules that secures LLM applications from risks like data leakage, bias, hallucinations and malicious attacks such as jailbreaking and prompt injection and jailbreaking. Guardrails are created of either input or output safety guards, where each of them represents a unique safety criteria to safeguard your LLM against.

LLM guardrails work in real-time to either capture malicious user inputs or screen model outputs. There are different types of guardrails that specializes in different type of harmful input or output.

Why are LLM Guardrails Important

LLM guardrails acts as a critical line of defense across various threat vectors in LLM-powered systems:

Data Protection: Guardrails helps prevent LLM from leaking sensitive information, internal training data, protecting both enterprise and personal data.

Prevention of exploitation: Guardrails prevent LLMs from being used for malicious purpose like writing ransomware scripts, recommending banned substance or enabling scams.

Secure open-source deployment: Open source models like LLaMA or other platforms can be updated or deployed privately. However, guardrails are required to ensure their outputs remain secure.

Attack surface reduction: Guardrails restrict how users can exploit AI models through inputs that minimizing attack vectors like prompt chaining etc.

Types of LLM Guardrails

There are 5 types of LLM Guardrails you should be aware of.

1. Input Guardrails

Each LLM platform offers input filters built to scan user submitted prompts for harmful content before they reach the LLM. These filters consist of.

Ethical Guardrails - Ethical guardrails enforce strict limitations to prevent biased, discriminatory or harmful output and make sure that an LLM complies with proper moral and social norms.

Security Guardrails - Security guardrails are designed to secure against external and internal security threats. They focus on ensuring model which cannot be manipulated to spread misinformation or disclose sensitive information.

Compliance Guardrails - Compliance guardrails makes sure the outputs generated by the LLM align with legal standards, which includes data protection and user privacy. They are frequently used in industries where the regulatory compliance is crucial like finance, healthcare and legal service.

Adaptive Guardrails - Adaptive guardrails keep evolving alongside a model, which ensures continuous compliance with legal and ethical standards as the LLM learns and adapts.

Contextual Guardrails - Contextual guardrails helps in fine tuning the LLM’s understanding of what is relevant and acceptable for its specific use case. They help prevent the generation of inappropriate, harmful or illegal text.

2. Output Guardrails

The LLM platforms also include output filters that conducts scanning of LLM generated responses for harmful and restricted content before it is delivered to user. These filters include.

Language Quality Guardrails - Language quality guardrails demands LLM outputs to meet high standards of clarity, coherence and readability. They ensure that the text produced is relevant, linguistically accurate and free from errors.

Response and Relevance Guardrails - LLM should meet users intent after passing via security filters. Response and relevance guardrails verify that models responses are focused, accurate, and are aligned with user’s input.

Logical and Functionality Validation Guardrails - LLMs are required to ensure logical and functional accuracy along with linguistic accuracy. These specialized tasks are handled by Logic and functionality validation guardrails.

Principles of LLM Guardrails

Here are some principles of LLM Guardrails.

Restricted Enforcement: Define clear limits on acceptable inputs, model behaviors and outputs to ensure security, safety and regulatory compliance.

In-Depth Defense: Add guardrails before, during and after prompt processing which covers input validation, prompt construction and output filtering.

Secure Prompt Construction: Secure system prompts by injecting structured logic (roles, permissions, formatting) which resists manipulation and implements RBAC.

Output Safety and Integrity: Filter responses to prevent toxic content, sensitive data leakage and exposure system prompt, enforce schemas and repair harmful outputs.

Controlled Model Behavior: Put controls what the model can discuss and explicitly instruct it to ignore attempts to modify core instructions.

Strategies to Implement LLM Guardrails

Here are many strategies to implement LLM guardrails.

Secure Against Prompt Injections and Jailbreaks

Use input sanitization like tag stripping, regex, encoding normalization and length limits. This must be combined with AI-based detection to find and neutralize sophisticated attacks.

Validate Outputs

Add output guardrails to check relevance, schema compliance and supported formats. Replace or block responses that drift outside the application’s domain.

Maintain Least Privilege and Role Isolation

Enforce user identity, roles and permissions to every LLM request. Ensure tools and retrieved data are accessible only within the user’s authorized scope.

Prevent tool Misuse and Privilege Escalation

Gate tool invocations with authentication, schema validation and permission checks. Reject outputs that would expose unauthorized data or sources.

Enforce Clear Limits

Filter harmful or off-domain inputs using static rules and ML based intent detection to ensure that model handles only tasks within its defined scope.

Final Thoughts on LLM Guardrails

By effectively following the best practices of implementing guardrails and regularly upgrading these safeguards, security teams can take advantage of the full potential of AI agents while safeguarding users and maintaining trust. With Akto AI Agent Security, automatically discover and catalog MCPs, AI agents, tools, and resources across your infrastructure, get real-time alerts and block attacks such as prompt injection and more in real-time. Guardrails enable your security teams to build safe and reliable AI Agents with by defining policies, enforcing compliance, and preventing unwanted actions in real time via Akto’s AI Guardrail Engine.

Book a demo right away to explore more on Akto's Agentic AI and MCP security.

Experience enterprise-grade Agentic Security solution